bedrock-wrapper v2.3.0

🪨 Bedrock Wrapper

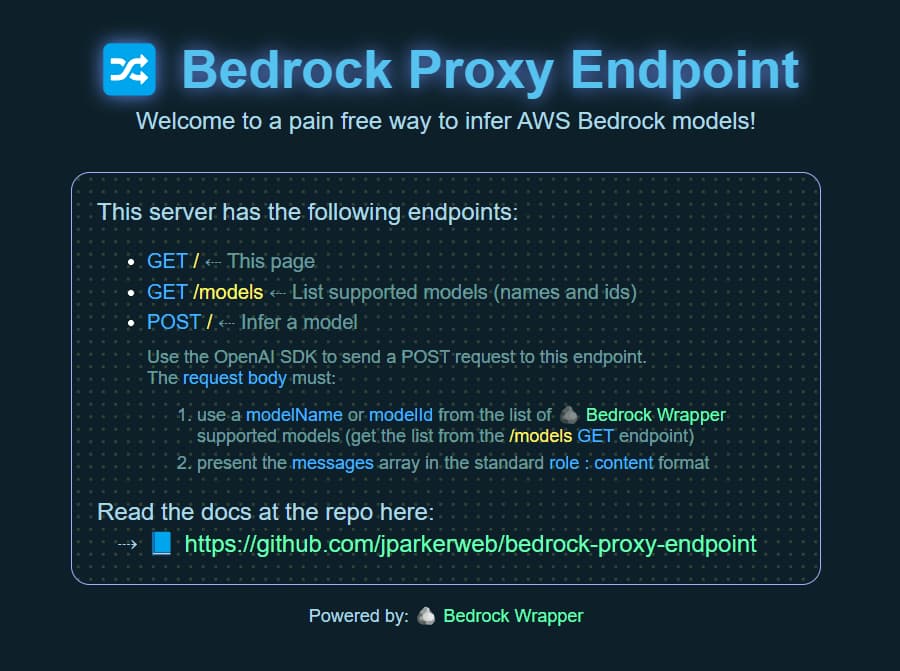

Bedrock Wrapper is an npm package that simplifies the integration of existing OpenAI-compatible API objects with AWS Bedrock's serverless inference LLMs. Follow the steps below to integrate it into your own application, or alternatively use the 🔀 Bedrock Proxy Endpoint project to spin up your own custom OpenAI-compatible server endpoint for even easier inference (using the standard `baseUrl` and `apiKey` parameters).


Install

- install package:

```bash
npm install bedrock-wrapper
```

Usage

- import `bedrockWrapper`:

```javascript
import { bedrockWrapper } from "bedrock-wrapper";
```

- create an `awsCreds` object and fill in your AWS credentials:

```javascript
const awsCreds = {
    region: AWS_REGION,
    accessKeyId: AWS_ACCESS_KEY_ID,
    secretAccessKey: AWS_SECRET_ACCESS_KEY,
};
```

- define a `messages` variable in OpenAI's role/content format:

```javascript
const messages = [
    {
        role: "system",
        content: "You are a helpful AI assistant that follows instructions extremely well. Answer the user questions accurately. Think step by step before answering the question. You will get a $100 tip if you provide the correct answer.",
    },
    {
        role: "user",
        content: "Describe why openai api standard used by lots of serverless LLM api providers is better than aws bedrock invoke api offered by aws bedrock. Limit your response to five sentences.",
    },
    {
        role: "assistant",
        content: "",
    },
];
```

- clone your OpenAI chat completions object into `openaiChatCompletionsCreateObject`, or create a new one and edit the values. The `model` value should be the corresponding `modelName` value from the Supported Models table below:

```javascript
const openaiChatCompletionsCreateObject = {
    "messages": messages,
    "model": "Llama-3-1-8b",
    "max_tokens": LLM_MAX_GEN_TOKENS,
    "stream": true,
    "temperature": LLM_TEMPERATURE,
    "top_p": LLM_TOP_P,
};
```

- call the `bedrockWrapper` function, passing in the previously defined `awsCreds` and `openaiChatCompletionsCreateObject` objects:

```javascript
// create a variable to hold the complete response
let completeResponse = "";
// invoke the streamed bedrock api response
for await (const chunk of bedrockWrapper(awsCreds, openaiChatCompletionsCreateObject)) {
    completeResponse += chunk;
    // ---------------------------------------------------
    // -- each chunk is streamed as it is received here --
    // ---------------------------------------------------
    process.stdout.write(chunk); // ⇠ do stuff with the streamed chunk
}
// console.log(`\ncompleteResponse:\n${completeResponse}\n`); // ⇠ optional: do stuff with the complete response returned from the API, regardless of stream or not
```

- if calling the unstreamed version (`"stream": false`), you can call `bedrockWrapper` like this:

```javascript
// create a variable to hold the complete response
let completeResponse = "";
if (!openaiChatCompletionsCreateObject.stream) {
    // invoke the unstreamed bedrock api response
    const response = await bedrockWrapper(awsCreds, openaiChatCompletionsCreateObject);
    for await (const data of response) {
        completeResponse += data;
    }
    // ----------------------------------------------------
    // -- unstreamed complete response is available here --
    // ----------------------------------------------------
    console.log(`\ncompleteResponse:\n${completeResponse}\n`); // ⇠ do stuff with the complete response
}
```
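Both calls above consume an iterable of text chunks. The following is a minimal sketch of two hypothetical helpers that are not part of bedrock-wrapper. `loadAwsCredsFromEnv()` builds the `awsCreds` object and fails fast when a value is missing. It assumes your credentials live in environment variables named after the placeholders used above (`AWS_REGION`, `AWS_ACCESS_KEY_ID`, `AWS_SECRET_ACCESS_KEY`). `collectResponse()` drains the chunks into one string and can forward each chunk to a callback. A stub generator, `fakeBedrockWrapper`, stands in for `bedrockWrapper` so the sketch runs without AWS access.

```javascript
// Hypothetical helpers: NOT part of bedrock-wrapper's API.

// Build the awsCreds object from environment variables.
// ASSUMPTION: the variable names mirror the placeholders in the usage example above.
function loadAwsCredsFromEnv(env = process.env) {
    const required = ["AWS_REGION", "AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY"];
    const missing = required.filter((name) => !env[name]);
    if (missing.length > 0) {
        throw new Error(`Missing environment variables: ${missing.join(", ")}`);
    }
    return {
        region: env.AWS_REGION,
        accessKeyId: env.AWS_ACCESS_KEY_ID,
        secretAccessKey: env.AWS_SECRET_ACCESS_KEY,
    };
}

// Drain an async (or plain) iterable of string chunks into one string,
// calling onChunk with each chunk as it arrives (e.g. to write it to stdout).
async function collectResponse(chunks, onChunk = () => {}) {
    let completeResponse = "";
    for await (const chunk of chunks) {
        completeResponse += chunk;
        onChunk(chunk);
    }
    return completeResponse;
}

// Stub standing in for bedrockWrapper(awsCreds, openaiChatCompletionsCreateObject)
// so the sketch runs without AWS access; it yields a fixed sequence of chunks.
async function* fakeBedrockWrapper(awsCreds, createObject) {
    for (const piece of ["Hello", ", ", "Bedrock", "!"]) {
        yield piece;
    }
}

// Usage with the stub; in real code, pass bedrockWrapper(...) instead.
collectResponse(fakeBedrockWrapper({}, {}), (chunk) => process.stdout.write(chunk))
    .then((completeResponse) => console.log(`\ncompleteResponse: ${completeResponse}`));
```

`collectResponse` relies only on `for await`, which accepts both async and ordinary iterables. It should therefore also fit the unstreamed call above.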

Supported Models

| modelName | AWS Model Id | Image Support |
|---|---|---|
| Claude-3-7-Sonnet-Thinking | us.anthropic.claude-3-7-sonnet-20250219-v1:0 | ✅ |
| Claude-3-7-Sonnet | us.anthropic.claude-3-7-sonnet-20250219-v1:0 | ✅ |
| Claude-3-5-Sonnet-v2 | anthropic.claude-3-5-sonnet-20241022-v2:0 | ✅ |
| Claude-3-5-Sonnet | anthropic.claude-3-5-sonnet-20240620-v1:0 | ✅ |
| Claude-3-5-Haiku | anthropic.claude-3-5-haiku-20241022-v1:0 | ❌ |
| Claude-3-Haiku | anthropic.claude-3-haiku-20240307-v1:0 | ❌ |
| Llama-3-3-70b | us.meta.llama3-3-70b-instruct-v1:0 | ❌ |
| Llama-3-2-1b | us.meta.llama3-2-1b-instruct-v1:0 | ❌ |
| Llama-3-2-3b | us.meta.llama3-2-3b-instruct-v1:0 | ❌ |
| Llama-3-2-11b | us.meta.llama3-2-11b-instruct-v1:0 | ❌ |
| Llama-3-2-90b | us.meta.llama3-2-90b-instruct-v1:0 | ❌ |
| Llama-3-1-8b | meta.llama3-1-8b-instruct-v1:0 | ❌ |
| Llama-3-1-70b | meta.llama3-1-70b-instruct-v1:0 | ❌ |
| Llama-3-1-405b | meta.llama3-1-405b-instruct-v1:0 | ❌ |
| Llama-3-8b | meta.llama3-8b-instruct-v1:0 | ❌ |
| Llama-3-70b | meta.llama3-70b-instruct-v1:0 | ❌ |
| Mistral-7b | mistral.mistral-7b-instruct-v0:2 | ❌ |
| Mixtral-8x7b | mistral.mixtral-8x7b-instruct-v0:1 | ❌ |
| Mistral-Large | mistral.mistral-large-2402-v1:0 | ❌ |

To return the list programmatically, you can import and call `listBedrockWrapperSupportedModels`:

```javascript
import { listBedrockWrapperSupportedModels } from 'bedrock-wrapper';

console.log(`\nsupported models:\n${JSON.stringify(await listBedrockWrapperSupportedModels())}\n`);
```

Additional Bedrock model support can be added. Please modify the `bedrock_models.js` file and submit a PR 🏆, or create an Issue.
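If your application switches models at runtime, it can help to reject image content before calling a text-only model. This is a minimal sketch. It assumes you keep your own local copy of the rows you use from the Supported Models table; only a few rows are copied here. `MODELS`, `hasImageContent`, and `assertModelAccepts` are hypothetical names, not exports of bedrock-wrapper. A hand-maintained copy can also drift out of sync with the package's `bedrock_models.js`.

```javascript
// Hypothetical local copy of a few rows from the Supported Models table.
// You must keep it in sync with the table (or bedrock_models.js) yourself.
const MODELS = {
    "Claude-3-7-Sonnet": { awsModelId: "us.anthropic.claude-3-7-sonnet-20250219-v1:0", image: true },
    "Claude-3-5-Sonnet-v2": { awsModelId: "anthropic.claude-3-5-sonnet-20241022-v2:0", image: true },
    "Claude-3-5-Haiku": { awsModelId: "anthropic.claude-3-5-haiku-20241022-v1:0", image: false },
    "Llama-3-1-8b": { awsModelId: "meta.llama3-1-8b-instruct-v1:0", image: false },
};

// True if any message carries an OpenAI-style image_url content part.
function hasImageContent(messages) {
    return messages.some(
        (m) => Array.isArray(m.content) && m.content.some((part) => part.type === "image_url")
    );
}

// Throw early if the model is missing from the local copy, or if the request
// contains images but the model has no image support. Returns the AWS model id.
function assertModelAccepts(createObject) {
    const entry = MODELS[createObject.model];
    if (!entry) {
        throw new Error(`Unknown modelName: ${createObject.model}`);
    }
    if (hasImageContent(createObject.messages) && !entry.image) {
        throw new Error(`${createObject.model} does not support image input`);
    }
    return entry.awsModelId;
}
```

Call `assertModelAccepts(openaiChatCompletionsCreateObject)` just before `bedrockWrapper(...)` so a mismatch surfaces as a local error rather than a failed request.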

Image Support

For models with image support (Claude 3.5 Sonnet, Claude 3.5 Sonnet v2, Claude 3.7 Sonnet, and Claude 3.7 Sonnet Thinking), you can include images in your messages using the following format:

```javascript
const messages = [
    {
        role: "system",
        content: "You are a helpful AI assistant that can analyze images.",
    },
    {
        role: "user",
        content: [
            { type: "text", text: "What's in this image?" },
            {
                type: "image_url",
                image_url: {
                    url: "data:image/jpeg;base64,/9j/4AAQSkZJRgABAQEA..." // base64 encoded image
                }
            }
        ]
    }
];
```

You can also use a direct URL to an image instead of base64 encoding:

```javascript
const messages = [
    {
        role: "user",
        content: [
            { type: "text", text: "Describe this image in detail." },
            {
                type: "image_url",
                image_url: {
                    url: "https://example.com/path/to/image.jpg" // direct URL to image
                }
            }
        ]
    }
];
```

You can include multiple images in a single message by adding more `image_url` objects to the `content` array.
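To send a local file in the base64 form shown above, you read its bytes and wrap them in a `data:` URL. This is a minimal sketch using only Node's global `Buffer`. `toDataUrl`, `buildImageMessage`, and the extension-to-MIME-type map are hypothetical helpers, not part of bedrock-wrapper. The sketch also assumes the wrapper forwards the four listed image formats; it has only been shown with JPEG above. String entries are passed through unchanged as URLs, which lets one message mix direct URLs and local files.

```javascript
// Hypothetical helpers for building OpenAI-style image messages (not part of bedrock-wrapper).

// Extension -> MIME type map. ASSUMPTION: these formats are accepted downstream.
const MIME_TYPES = {
    ".jpg": "image/jpeg",
    ".jpeg": "image/jpeg",
    ".png": "image/png",
    ".gif": "image/gif",
    ".webp": "image/webp",
};

// Encode raw image bytes as a base64 data URL, choosing the MIME type from the file name.
function toDataUrl(bytes, fileName) {
    const dot = fileName.lastIndexOf(".");
    const ext = dot === -1 ? "" : fileName.slice(dot).toLowerCase();
    const mimeType = MIME_TYPES[ext];
    if (!mimeType) {
        throw new Error(`Unsupported image type: ${fileName}`);
    }
    return `data:${mimeType};base64,${Buffer.from(bytes).toString("base64")}`;
}

// Build one user message: a text part followed by one image_url part per image.
// Each image is either a URL string or an object { bytes, fileName }.
function buildImageMessage(text, images) {
    const parts = [{ type: "text", text }];
    for (const image of images) {
        const url = typeof image === "string" ? image : toDataUrl(image.bytes, image.fileName);
        parts.push({ type: "image_url", image_url: { url } });
    }
    return { role: "user", content: parts };
}
```

In real code, the bytes would typically come from `readFileSync(path)` (from `node:fs`). The returned message can go into the `messages` array alongside a system message.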

📢 P.S.

In case you missed it at the beginning of this doc, for an even easier setup, use the 🔀 Bedrock Proxy Endpoint project to spin up your own custom OpenAI-compatible server endpoint (using the standard `baseUrl` and `apiKey` parameters).


Please consider sending me a tip to support my work 😀