daq-proc v8.0.0

daq-proc

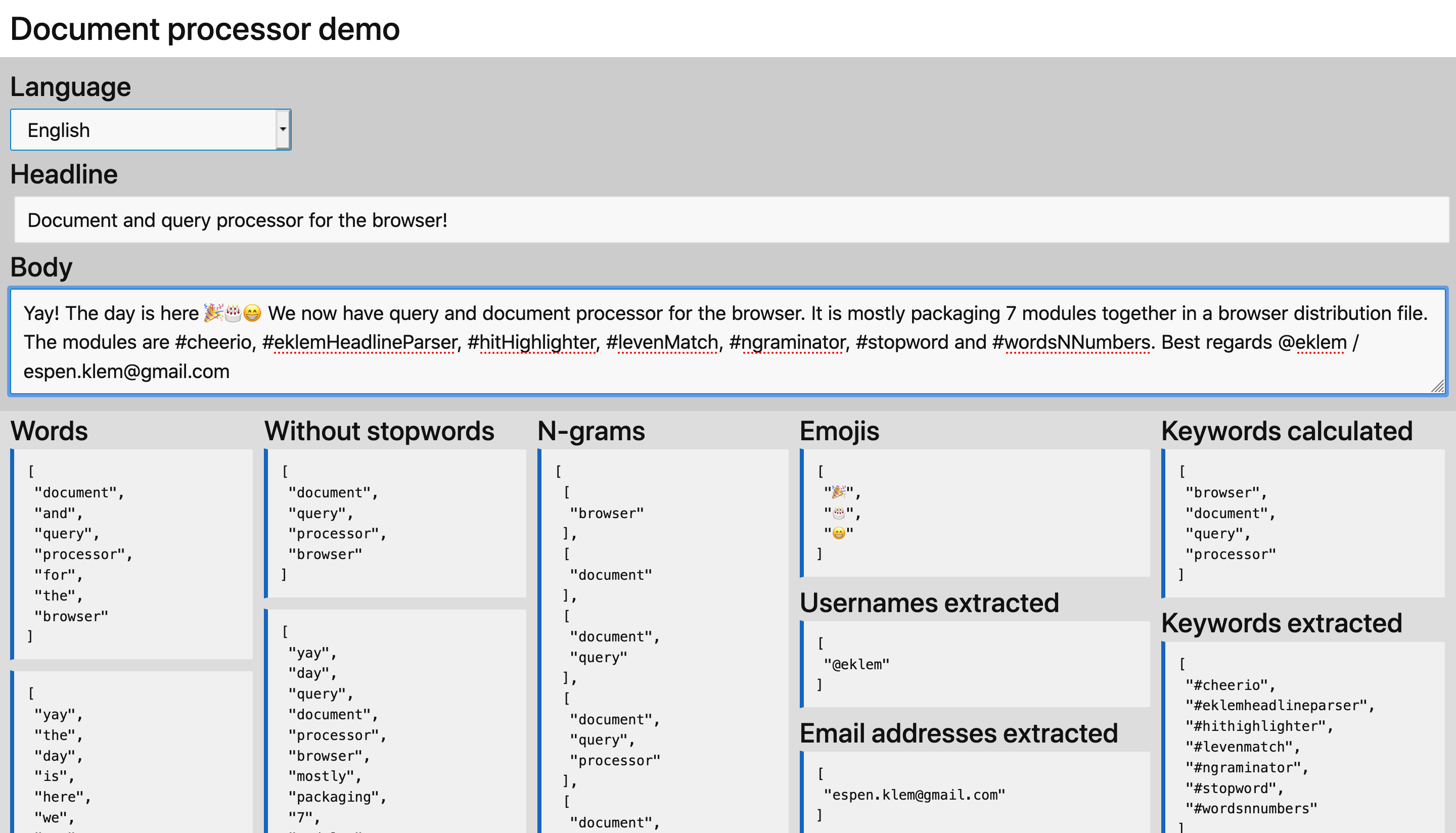

A simple document and query processor that makes search in the browser and Node.js a little better. It removes stopwords (smaller index and fewer irrelevant hits), extracts keywords to filter on, and prepares n-grams for auto-complete functionality.
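To make two of these steps concrete, here is a minimal, dependency-free sketch of stopword removal and word n-gram generation. It is an illustration only and not how the bundled libraries are implemented: `STOPWORDS`, `dropStopwords` and `wordNgrams` are hypothetical names, and the stopword list is deliberately tiny.

```javascript
// Illustration only: hypothetical helpers, not part of daq-proc's API.
// A real stopword list is much longer than this one.
const STOPWORDS = new Set(['a', 'an', 'and', 'for', 'is', 'the', 'to'])

// Drop every word that is in the stopword set.
function dropStopwords (wordArray) {
  return wordArray.filter(word => !STOPWORDS.has(word))
}

// Build all word n-grams of the given size, in order of appearance.
function wordNgrams (wordArray, size) {
  const result = []
  for (let i = 0; i + size <= wordArray.length; i++) {
    result.push(wordArray.slice(i, i + size))
  }
  return result
}

const wordsIn = ['document', 'and', 'query', 'processing', 'for', 'the', 'browser']
const stopped = dropStopwords(wordsIn)
const bigrams = wordNgrams(stopped, 2)

console.log(stopped)
// [ 'document', 'query', 'processing', 'browser' ]
console.log(bigrams)
// [ [ 'document', 'query' ], [ 'query', 'processing' ], [ 'processing', 'browser' ] ]
```

Fewer words in means fewer n-grams out, which is why stopword removal comes before n-gram generation in this sketch.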

Demo

- document processor. Showcases the document processing side. Just add some words and see what comes out.

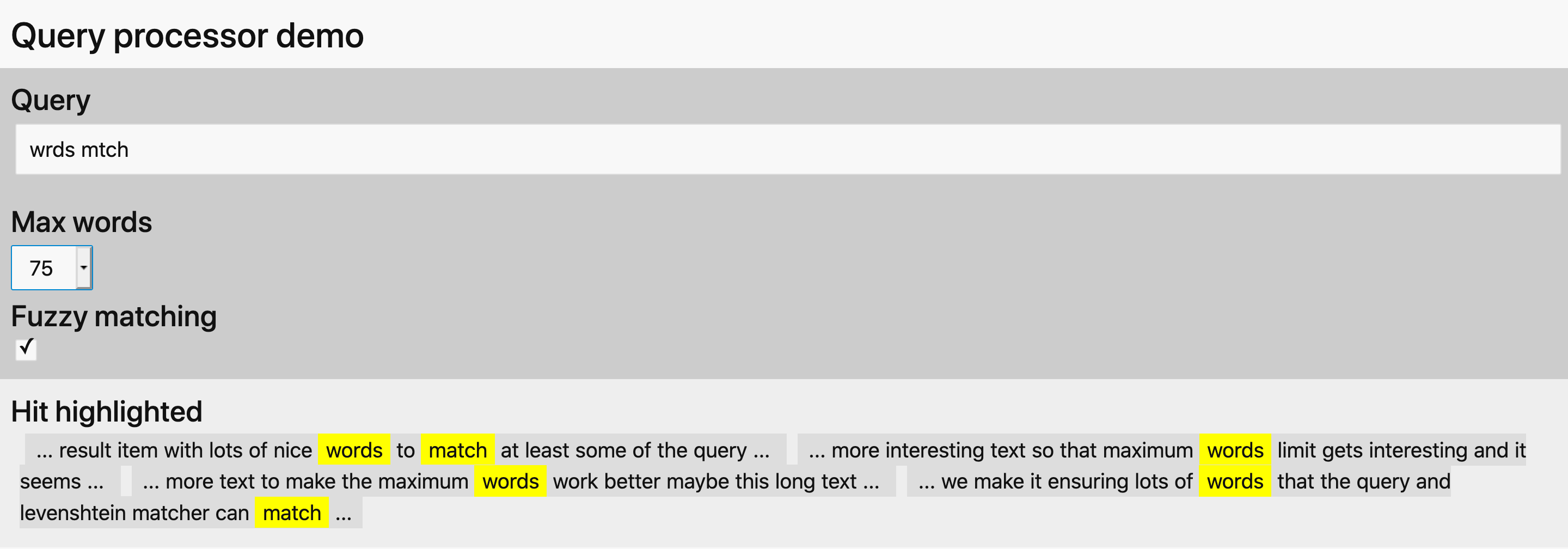

- query processor. Showcases hit highlighting and truncating text if needed. Possible to turn fuzzy matching on/off.
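The hit highlighting shown in the query demo can be pictured roughly like this. This is a simplified sketch and not the hit-highlighter source: `highlightHits` is a hypothetical name, the words are assumed to be plain text without HTML that needs escaping, and truncation is left out.

```javascript
// Illustration only: wraps each run of consecutive query hits in one <span>.
function highlightHits (queryWords, itemWords) {
  const hits = new Set(queryWords)
  const parts = []
  let run = []

  // Close the current run of hits, if there is one.
  const flush = () => {
    if (run.length > 0) {
      parts.push('<span class="highlighted">' + run.join(' ') + '</span>')
      run = []
    }
  }

  for (const word of itemWords) {
    if (hits.has(word)) {
      run.push(word)
    } else {
      flush()
      parts.push(word)
    }
  }
  flush()
  return parts.join(' ')
}

const highlighted = highlightHits(
  ['interesting', 'words'],
  ['some', 'interesting', 'words', 'to', 'remember']
)
console.log(highlighted)
// some <span class="highlighted">interesting words</span> to remember
```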

This library does not create anything new. It just packages 6 libraries that go well together into one browser distribution file, and shows how they may be useful through tests and the interactive demo.

Libraries that daq-proc is depending on

- cheerio - Here specifically used to extract text from all or parts of some HTML.
- eklem-headline-parser - Determines the most relevant keywords in a headline by considering article context.
- hit-highlighter - Highlighting hits from a query in a result item.
- leven-match - Calculating Levenshtein match between words in two arrays within given distance. Good for fuzzy matching.
- ngraminator - Generate n-grams.
- stopword - Removes stopwords from an array of words. To keep your index small and remove all words without a scent of information and/or remove stopwords from the query, making the search engine work less hard to find relevant results.
- words'n'numbers - Extract words and optionally numbers from a string of text into arrays. Arrays that can be fed to stopword, eklem-headline-parser, leven-match, ngraminator and hit-highlighter.
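As a rough picture of what "Levenshtein match within given distance" means, here is a small self-contained sketch. It is not the leven-match implementation: `levenshtein` and `matchWithin` are hypothetical names, and the approach (compare every query word against every index word) is the simplest one that works, not necessarily the fastest.

```javascript
// Illustration only: classic dynamic-programming edit distance.
function levenshtein (a, b) {
  // At the start of iteration i, prev[j] is the distance between
  // the first i - 1 characters of a and the first j characters of b.
  let prev = Array.from({ length: b.length + 1 }, (_, j) => j)
  for (let i = 1; i <= a.length; i++) {
    const curr = [i]
    for (let j = 1; j <= b.length; j++) {
      const cost = a[i - 1] === b[j - 1] ? 0 : 1
      curr[j] = Math.min(
        prev[j] + 1, // deletion
        curr[j - 1] + 1, // insertion
        prev[j - 1] + cost // substitution or match
      )
    }
    prev = curr
  }
  return prev[b.length]
}

// For each query word, keep the index words within maxDistance edits.
function matchWithin (queryWords, indexWords, maxDistance) {
  return queryWords.map(queryWord =>
    indexWords.filter(indexWord => levenshtein(queryWord, indexWord) <= maxDistance)
  )
}

const matches = matchWithin(['qvery', 'words'], ['word', 'query', 'index', 'words'], 2)
console.log(matches)
// [ [ 'query' ], [ 'word', 'words' ] ]
```

A misspelled query word such as 'qvery' still finds 'query' because only one substitution separates them.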

Browser

Example - document processing side

<script src="https://cdn.jsdelivr.net/npm/daq-proc/dist/daq-proc.umd.min.js"></script>
<script>
// exposing the underlying libraries in a transparent way
const {
  load,
  removeStopwords, _123, afr, ara, hye, eus, ben, bre, bul, cat, zho, hrv, ces, dan, nld, eng, epo, est, fin, fra, glg, deu, ell, guj, hau, heb, hin, hun, ind, gle, ita, jpn, kor, kur, lat, lav, lit, lgg, lggNd, msa, mar, mya, nob, fas, pol, por, porBr, panGu, ron, rus, slk, slv, som, sot, spa, swa, swe, tha, tgl, tur, urd, ukr, vie, yor, zul,
  extract, words, numbers, emojis, tags, usernames, email,
  ngraminator,
  findKeywords,
  highlight,
  levenMatch
} = dqp

// input
const headlineString = 'Document and query processing for the browser!'
const bodyString = 'Yay! The day is here =) We now have document and query processing for the browser. It is mostly packaging 4 modules together in a browser distribution file. The modules are words-n-numbers, stopword, ngraminator and eklem-headline-parser'

// extracting word arrays
let headlineArray = extract(headlineString, {regex: [words, numbers], toLowercase: true})
let bodyArray = extract(bodyString, {regex: [words, numbers], toLowercase: true})
console.log('Word arrays: ')
console.dir(headlineArray)
console.dir(bodyArray)

// removing stopwords
let headlineStopped = removeStopwords(headlineArray)
let bodyStopped = removeStopwords(bodyArray)
console.log('Stopword removed arrays: ')
console.dir(headlineStopped)
console.dir(bodyStopped)

// n-grams
let headlineNgrams = ngraminator(headlineStopped, [2,3,4])
let bodyNgrams = ngraminator(bodyStopped, [2,3,4])
console.log('Ngram arrays: ')
console.dir(headlineNgrams)
console.dir(bodyNgrams)

// calculating important keywords
let keywords = findKeywords(headlineStopped, bodyStopped, 5)
console.log('Keyword array: ')
console.dir(keywords)

</script>

Example - Query side

<script src="https://cdn.jsdelivr.net/npm/daq-proc/dist/daq-proc.umd.min.js"></script>
<script>
// exposing the underlying libraries in a transparent way
const {
  highlight,
  levenMatch
} = dqp

// hit highlighting
const query = ['interesting', 'words']
const searchResult = ['some', 'interesting', 'words', 'to', 'remember']
highlight(query, searchResult)
// returns:
// 'some <span class="highlighted">interesting words</span> to remember'

// fuzzy matching
const index = ['return', 'all', 'word', 'matches', 'between', 'two', 'arrays', 'within', 'given', 'levenshtein', 'distance', 'intended', 'use', 'is', 'to', 'words', 'in', 'a', 'query', 'that', 'has', 'an', 'index', 'good', 'for', 'autocomplete', 'type', 'functionality,', 'and', 'some', 'cases', 'also', 'searching']
// named fuzzyQuery because `query` is already declared above
const fuzzyQuery = ['qvery', 'words', 'levensthein']
levenMatch(fuzzyQuery, index, {distance: 2})
// returns:
// [ [ 'query' ], [ 'word', 'words' ], [ 'levenshtein' ] ]
</script>

Node.js

It's fully possible to use daq-proc on Node.js too. The tests cover both Node.js and the browser. It only wraps 6 libraries for ease of use in the browser, but could come in handy for e.g. simple crawler scenarios.

Something missing?
