rdb v3.10.1

RDB is an Object Relational Mapper for Node.js and TypeScript that integrates with a range of popular databases. It works in both TypeScript and JavaScript projects, using either CommonJS or ECMAScript modules.

Key Features

- Rich Querying Model: RDB provides a powerful and intuitive querying model, making it easy to retrieve, filter, and manipulate data from your databases.

- Active Record: With a concise and expressive syntax, RDB enables you to interact with your database using the Active Record Pattern.

- No Code Generation Required: Enjoy full IntelliSense, even in table mappings, without the need for cumbersome code generation.

- TypeScript and JavaScript Support: RDB fully supports both TypeScript and JavaScript, allowing you to leverage the benefits of static typing and modern ECMAScript features.

- Works in the Browser: You can securely use RDB in the browser by utilizing the Express.js plugin, which serves to safeguard sensitive database credentials from exposure at the client level. This method mirrors a traditional REST API, augmented with advanced TypeScript tooling for enhanced functionality.

Supported Databases

✅ Postgres

✅ MS SQL

✅ MySQL

✅ Oracle

✅ SAP ASE

✅ SQLite

This is the Modern TypeScript Documentation. Are you looking for the Classic Documentation?

Sponsorship ♡

If you value the hard work behind RDB and wish to see it evolve further, consider sponsoring. Your support fuels the journey of refining and expanding this tool for our developer community.

Installation

$ npm install rdb

Example

Watch the tutorial video on YouTube

Here we choose SQLite.

$ npm install sqlite3

📄 map.ts

import rdb from 'rdb';

const map = rdb.map(x => ({

customer: x.table('customer').map(({ column }) => ({

id: column('id').numeric().primary().notNullExceptInsert(),

name: column('name').string(),

balance: column('balance').numeric(),

isActive: column('isActive').boolean(),

})),

order: x.table('_order').map(({ column }) => ({

id: column('id').numeric().primary().notNullExceptInsert(),

orderDate: column('orderDate').date().notNull(),

customerId: column('customerId').numeric().notNullExceptInsert(),

})),

orderLine: x.table('orderLine').map(({ column }) => ({

id: column('id').numeric().primary(),

orderId: column('orderId').numeric(),

product: column('product').string(),

amount: column('amount').numeric(),

})),

deliveryAddress: x.table('deliveryAddress').map(({ column }) => ({

id: column('id').numeric().primary(),

orderId: column('orderId').numeric(),

name: column('name').string(),

street: column('street').string(),

postalCode: column('postalCode').string(),

postalPlace: column('postalPlace').string(),

countryCode: column('countryCode').string(),

}))

})).map(x => ({

order: x.order.map(v => ({

customer: v.references(x.customer).by('customerId'),

lines: v.hasMany(x.orderLine).by('orderId'),

deliveryAddress: v.hasOne(x.deliveryAddress).by('orderId'),

}))

}));

export default map;

📄 update.ts

import map from './map';

const db = map.sqlite('demo.db');

updateRow();

async function updateRow() {

const order = await db.order.getById(2, {

lines: true

});

order.lines.push({

product: 'broomstick',

amount: 300

});

await order.saveChanges();

}

📄 filter.ts

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const orders = await db.order.getAll({

where: x => x.lines.any(line => line.product.contains('broomstick'))

.and(db.order.customer.name.startsWith('Harry')),

lines: true,

deliveryAddress: true,

customer: true

});

}

API

Each column within your database table is designated by using the column() method, in which you specify its name. This action generates a reference to a column object that enables you to articulate further column properties like its data type or if it serves as a primary key.

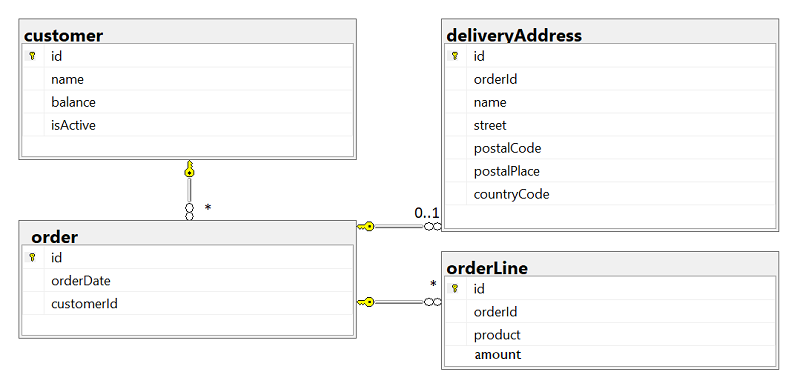

Relationships between tables can also be outlined. By using methods like hasOne, hasMany, and references, you can establish connections that reflect the relationships in your data schema. In the example below, an 'order' is linked to a 'customer' reference, a 'deliveryAddress', and multiple 'lines'. The hasMany and hasOne relations represent ownership: the tables 'deliveryAddress' and 'orderLine' are owned by the 'order' table, and therefore they contain the 'orderId' column referring to their parent table, which is 'order'. Conversely, the customer table is independent and can exist without any knowledge of the 'order' table. Therefore we say that the order table references the customer table, which requires a 'customerId' column in the 'order' table.

📄 map.ts

import rdb from 'rdb';

const map = rdb.map(x => ({

customer: x.table('customer').map(({ column }) => ({

id: column('id').numeric().primary().notNullExceptInsert(),

name: column('name').string(),

balance: column('balance').numeric(),

isActive: column('isActive').boolean(),

})),

order: x.table('_order').map(({ column }) => ({

id: column('id').numeric().primary().notNullExceptInsert(),

orderDate: column('orderDate').date().notNull(),

customerId: column('customerId').numeric().notNullExceptInsert(),

})),

orderLine: x.table('orderLine').map(({ column }) => ({

id: column('id').numeric().primary(),

orderId: column('orderId').numeric(),

product: column('product').string(),

amount: column('amount').numeric(),

})),

deliveryAddress: x.table('deliveryAddress').map(({ column }) => ({

id: column('id').numeric().primary(),

orderId: column('orderId').numeric(),

name: column('name').string(),

street: column('street').string(),

postalCode: column('postalCode').string(),

postalPlace: column('postalPlace').string(),

countryCode: column('countryCode').string(),

}))

})).map(x => ({

order: x.order.map(({ hasOne, hasMany, references }) => ({

customer: references(x.customer).by('customerId'),

deliveryAddress: hasOne(x.deliveryAddress).by('orderId'),

lines: hasMany(x.orderLine).by('orderId')

}))

}));

export default map;

The init.ts script resets our SQLite database. It's worth noting that SQLite databases are represented as single files, which makes them wonderfully straightforward to manage.

At the start of the script, we import our database mapping from the map.ts file. This gives us access to the db object, which we'll use to interact with our SQLite database.

Then, we define a SQL string. This string outlines the structure of our SQLite database. It first specifies to drop existing tables named 'deliveryAddress', 'orderLine', '_order', and 'customer' if they exist. This ensures we have a clean slate. Then, it dictates how to create these tables anew with the necessary columns and constraints.

Because of a peculiarity in SQLite, which only allows one statement execution at a time, we split this SQL string into separate statements. We do this using the split() method, which breaks up the string at every semicolon.

📄 init.ts

import map from './map';

const db = map.sqlite('demo.db');

const sql = `DROP TABLE IF EXISTS deliveryAddress; DROP TABLE IF EXISTS orderLine; DROP TABLE IF EXISTS _order; DROP TABLE IF EXISTS customer;

CREATE TABLE customer (

id INTEGER PRIMARY KEY,

name TEXT,

balance NUMERIC,

isActive INTEGER

);

CREATE TABLE _order (

id INTEGER PRIMARY KEY,

orderDate TEXT,

customerId INTEGER REFERENCES customer

);

CREATE TABLE orderLine (

id INTEGER PRIMARY KEY,

orderId INTEGER REFERENCES _order,

product TEXT,

amount NUMERIC(10,2)

);

CREATE TABLE deliveryAddress (

id INTEGER PRIMARY KEY,

orderId INTEGER REFERENCES _order,

name TEXT,

street TEXT,

postalCode TEXT,

postalPlace TEXT,

countryCode TEXT

)

`;

async function init() {

const statements = sql.split(';');

for (let i = 0; i < statements.length; i++) {

await db.query(statements[i]);

}

}

export default init;

In SQLite, columns with the INTEGER PRIMARY KEY attribute are designed to autoincrement by default. This means that each time a new record is inserted into the table, SQLite automatically produces a numeric key for the id column that is one greater than the largest existing key. This mechanism is particularly handy when you want to create unique identifiers for your table rows without manually entering each id.
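The splitting step used in init.ts can be sketched in isolation. The helper name splitStatements is hypothetical and not part of RDB; it trims each fragment and drops empty ones, so a trailing semicolon does not produce an empty statement. This is a naive sketch: it would also split on a semicolon inside a string literal, which is acceptable for a schema script like the one above.

```typescript
// Hypothetical helper, not part of RDB: split a multi-statement SQL script
// into individual statements, dropping empty fragments.
function splitStatements(sql: string): string[] {
  return sql
    .split(';')
    .map(statement => statement.trim())
    .filter(statement => statement.length > 0);
}

const script = `DROP TABLE IF EXISTS customer;
CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT);
`;

// Two statements remain; the empty fragment after the last semicolon is dropped.
const statements = splitStatements(script);
```

Each element of statements could then be passed to db.query one at a time, as the init function does.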

SQLite

$ npm install sqlite3

import map from './map';

const db = map.sqlite('demo.db');

With connection pool

$ npm install sqlite3

import map from './map';

const db = map.sqlite('demo.db', { size: 10 });

From the browser

You can use RDB securely from the browser through the Express plugin, which keeps database credentials on the server. No raw SQL is sent from the client. Instead, the client records its method calls, and the server replays and authenticates them. In practice this works like a traditional REST API with TypeScript tooling on top. You can read more about it in the section called In the browser.
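The record-and-replay idea can be illustrated with a small, self-contained sketch. It is only a model of the general technique under simplifying assumptions, not RDB's actual wire format or implementation; the names RecordedCall, createRecorder and replay are hypothetical.

```typescript
interface RecordedCall { method: string; args: unknown[]; }

type Handler = (...args: unknown[]) => unknown;

// Client side: a stand-in object that records calls instead of executing them.
function createRecorder(log: RecordedCall[]): Record<string, (...args: unknown[]) => void> {
  return new Proxy({} as Record<string, (...args: unknown[]) => void>, {
    get(_target, property) {
      return (...args: unknown[]) => {
        log.push({ method: String(property), args });
      };
    },
  });
}

// Server side: replay the recorded calls, but only against an allow-list.
function replay(log: RecordedCall[], handlers: Record<string, Handler>): unknown[] {
  return log.map(call => {
    if (!Object.prototype.hasOwnProperty.call(handlers, call.method)) {
      throw new Error(`Method not allowed: ${call.method}`);
    }
    return handlers[call.method](...call.args);
  });
}

const recorded: RecordedCall[] = [];
const client = createRecorder(recorded);
client.startsWith('name', 'Harry'); // nothing runs yet; the call is only recorded

// The log is plain JSON, so it can travel in a request body.
const replayed = replay(JSON.parse(JSON.stringify(recorded)), {
  startsWith: (column, prefix) => ({ column, op: 'startsWith', value: prefix }),
});
```

Because the server decides which methods exist in the allow-list, the client never gets to send arbitrary SQL.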

📄 server.ts

import map from './map';

import { json } from 'body-parser';

import express from 'express';

import cors from 'cors';

const db = map.sqlite('demo.db');

express().disable('x-powered-by')

.use(json({ limit: '100mb' }))

.use(cors())

//for demonstrational purposes, authentication middleware is not shown here.

.use('/rdb', db.express())

.listen(3000, () => console.log('Example app listening on port 3000!'));

📄 browser.ts

import map from './map';

const db = map.http('http://localhost:3000/rdb');

MySQL

$ npm install mysql2

import map from './map';

const db = map.mysql('mysql://test:test@mysql/test');

MS SQL

$ npm install tedious

import map from './map';

const db = map.mssql({

server: 'mssql',

options: {

encrypt: false,

database: 'test'

},

authentication: {

type: 'default',

options: {

userName: 'sa',

password: 'P@assword123',

}

}

});

PostgreSQL

$ npm install pg

import map from './map';

const db = map.pg('postgres://postgres:postgres@postgres/postgres');

Oracle

$ npm install oracledb

import map from './map';

const db = map.oracle({

user: 'sys',

password: 'P@assword123',

connectString: 'oracle/XE',

privilege: 2

});

SAP Adaptive Server

Even though msnodesqlv8 was developed for MS SQL, it also works for SAP ASE as it is ODBC compliant.

$ npm install msnodesqlv8

import { fileURLToPath } from 'url';

import { dirname } from 'path';

import map from './map';

const __filename = fileURLToPath(import.meta.url);

const __dirname = dirname(__filename);

//download odbc driver from sap web pages

const db = map.sap(`Driver=${__dirname}/libsybdrvodb.so;SERVER=sapase;Port=5000;UID=sa;PWD=sybase;DATABASE=test`);

Next, we insert an array of two orders in the order table. Each order contains an orderDate, customer information, deliveryAddress, and lines for the order items. We use the customer constants "george" and "harry" from previous inserts. Observe that we don't pass in any primary keys. This is because all tables here have autoincremental keys. The second argument to "db.order.insert" specifies a fetching strategy. This fetching strategy plays a critical role in determining the depth of the data retrieved from the database after insertion. The fetching strategy specifies which associated data should be retrieved and included in the resulting orders object. In this case, the fetching strategy instructs the database to retrieve the customer, deliveryAddress, and lines for each order.

Without a fetching strategy, "db.order.insert" would only return the root level of each order. In that case you would only get the id, orderDate, and customerId for each order.

import map from './map';

const db = map.sqlite('demo.db');

import init from './init';

insertRows();

async function insertRows() {

await init();

const george = await db.customer.insert({

name: 'George',

balance: 177,

isActive: true

});

const harry = await db.customer.insert({

name: 'Harry',

balance: 200,

isActive: true

});

const orders = await db.order.insert([

{

orderDate: new Date(2022, 0, 11, 9, 24, 47),

customer: george,

deliveryAddress: {

name: 'George',

street: 'Node street 1',

postalCode: '7059',

postalPlace: 'Jakobsli',

countryCode: 'NO'

},

lines: [

{ product: 'Bicycle', amount: 250 },

{ product: 'Small guitar', amount: 150 }

]

},

{

customer: harry,

orderDate: new Date(2021, 0, 11, 12, 22, 45),

deliveryAddress: {

name: 'Harry Potter',

street: '4 Privet Drive, Little Whinging',

postalCode: 'GU4',

postalPlace: 'Surrey',

countryCode: 'UK'

},

lines: [

{ product: 'Magic wand', amount: 300 }

]

}

], {customer: true, deliveryAddress: true, lines: true}); //fetching strategy

}

Conflict resolution

By default, the strategy for inserting rows is set to an optimistic approach. In this case, if a row is being inserted with an already existing primary key, the database raises an exception.

Currently, there are three concurrency strategies:

- optimistic: Raises an exception if another row was already inserted on that primary key.

- overwrite: Overwrites the property, regardless of changes by others.

- skipOnConflict: Silently avoids updating the property if another user has modified it in the interim.
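To make the three strategies concrete, here is a simplified in-memory model of inserting a row onto an existing primary key. It is a sketch of the semantics described above, not RDB's implementation; the names TableConcurrency and resolveInsert are hypothetical.

```typescript
type Concurrency = 'optimistic' | 'overwrite' | 'skipOnConflict';
type Row = Record<string, unknown>;

interface TableConcurrency {
  table: Concurrency;                    // default for every column
  columns?: Record<string, Concurrency>; // per-column overrides
}

// Simplified model of inserting 'incoming' when 'existing' may already hold that key.
function resolveInsert(existing: Row | undefined, incoming: Row, config: TableConcurrency): Row {
  if (existing === undefined) return { ...incoming }; // no conflict, plain insert
  const result: Row = { ...existing };
  for (const column of Object.keys(incoming)) {
    const override = config.columns ? config.columns[column] : undefined;
    const strategy = override !== undefined ? override : config.table;
    if (strategy === 'optimistic') {
      throw new Error(`Conflict on column '${column}': row already exists`);
    }
    if (strategy === 'overwrite') result[column] = incoming[column];
    // 'skipOnConflict': keep the existing value untouched
  }
  return result;
}

const vendorConfig: TableConcurrency = {
  table: 'overwrite',
  columns: { balance: 'skipOnConflict' },
};
const john: Row = { id: 1, name: 'John', balance: 100, isActive: true };
const merged = resolveInsert(
  john,
  { id: 1, name: 'George', balance: 177, isActive: false },
  vendorConfig,
);
// merged: { id: 1, name: 'George', balance: 100, isActive: false }
```

With the table-level strategy set to 'optimistic' instead, the same call would throw, which matches the default behavior described above.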

The concurrency option can be set either for the whole table or individually for each column. In the example below, we've set the concurrency strategy on the vendor table to overwrite, except for the column balance, which uses the skipOnConflict strategy. Because a row with id: 1 already exists, the name and isActive fields are overwritten, but the balance stays the same as in the original record.

import map from './map';

const db = map.sqlite('demo.db');

insertRows();

async function insertRows() {

const db2 = db({

vendor: {

balance: {

concurrency: 'skipOnConflict'

},

concurrency: 'overwrite'

}

});

await db2.vendor.insert({

id: 1,

name: 'John',

balance: 100,

isActive: true

});

//this will overwrite all fields but balance

const george = await db2.vendor.insert({

id: 1,

name: 'George',

balance: 177,

isActive: false

});

console.dir(george, {depth: Infinity});

// {

// id: 1,

// name: 'George',

// balance: 100,

// isActive: false

// }

}

The fetching strategy in RDB is optional, and its use is influenced by your specific needs. You can define the fetching strategy either on the table level or the column level. This granularity gives you the freedom to decide how much related data you want to pull along with your primary request.

All rows

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const orders = await db.order.getAll({

customer: true,

deliveryAddress: true,

lines: true

});

}

Limit, offset and order by

This script demonstrates how to fetch orders with customer, lines and deliveryAddress, limiting the results to 10, skipping the first row, and sorting the data based on the orderDate in descending order followed by id. The lines are sorted by product.

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const orders = await db.order.getAll({

offset: 1,

orderBy: ['orderDate desc', 'id'],

limit: 10,

customer: true,

deliveryAddress: true,

lines: {

orderBy: 'product'

},

});

}
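To make the combined effect of orderBy, offset and limit concrete, here is an in-memory equivalent on plain objects. It is only an illustration of the semantics (sort first, then skip, then take); the OrderRow type and the page function are hypothetical, and RDB performs this work in SQL.

```typescript
interface OrderRow { id: number; orderDate: string; }

// In-memory equivalent of orderBy: ['orderDate desc', 'id'] with offset and limit.
function page(rows: OrderRow[], offset: number, limit: number): OrderRow[] {
  return rows
    .slice() // copy, so the caller's array is not reordered
    .sort((a, b) => {
      if (a.orderDate < b.orderDate) return 1;  // later dates first
      if (a.orderDate > b.orderDate) return -1;
      return a.id - b.id;                       // ties broken by id ascending
    })
    .slice(offset, offset + limit);
}

const sampleOrders: OrderRow[] = [
  { id: 1, orderDate: '2022-01-11T09:24:47' },
  { id: 2, orderDate: '2021-01-11T12:22:45' },
  { id: 3, orderDate: '2022-01-11T09:24:47' },
];

// Sorted order is 1, 3, 2; skipping the first row leaves ids 3 and 2.
const pagedOrders = page(sampleOrders, 1, 10);
```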

With aggregated results

You can count records and aggregate numerical columns.

The following operators are supported:

- count

- sum

- min

- max

- avg

You can also elevate associated data to a parent level for easier access. In the example below, balance of the customer is elevated to the root level.
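As a plain-object illustration of what such aggregated and elevated properties contain, the sketch below computes them by hand for one order. The interfaces and the summarize function are assumptions made for this illustration; RDB computes the values in the database.

```typescript
interface LineItem { id: number; amount: number; }
interface OrderWithRelations {
  id: number;
  customer: { balance: number };
  lines: LineItem[];
}

function summarize(order: OrderWithRelations) {
  return {
    id: order.id,
    numberOfLines: order.lines.length,                                    // count
    totalAmount: order.lines.reduce((sum, line) => sum + line.amount, 0), // sum
    balance: order.customer.balance,                                      // elevated from customer
  };
}

const summary = summarize({
  id: 1,
  customer: { balance: 177 },
  lines: [{ id: 1, amount: 250 }, { id: 2, amount: 150 }],
});
// summary: { id: 1, numberOfLines: 2, totalAmount: 400, balance: 177 }
```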

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const orders = await db.order.getAll({

numberOfLines: x => x.count(x => x.lines.id),

totalAmount: x => x.sum(x => x.lines.amount),

balance: x => x.customer.balance

});

}

Many rows filtered

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const orders = await db.order.getAll({

where: x => x.lines.any(line => line.product.contains('i'))

.and(x.customer.balance.greaterThan(180)),

customer: true,

deliveryAddress: true,

lines: true

});

}

You can also use the alternative syntax for the where-filter. This way, the filter can be constructed independently from the fetching strategy. Keep in mind that you must use the getMany method instead of the getAll method.

It is also possible to combine where-filter with the independent filter when using the getMany method.

async function getRows() {

const filter = db.order.lines.any(line => line.product.contains('i'))

.and(db.order.customer.balance.greaterThan(180));

const orders = await db.order.getMany(filter, {

//where: x => ... can be combined as well

customer: true,

deliveryAddress: true,

lines: true

});

}

Single row filtered

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const order = await db.order.getOne(undefined /* optional filter */, {

where: x => x.customer(customer => customer.isActive.eq(true)

.and(customer.name.startsWith('Harr'))),

customer: true,

deliveryAddress: true,

lines: true

});

}

You can also use the alternative syntax for the where-filter. This way, the filter can be constructed independently from the fetching strategy.

It is also possible to combine where-filter with the independent filter when using the getOne method.

async function getRows() {

const filter = db.order.customer(customer => customer.isActive.eq(true)

.and(customer.name.startsWith('Harr')));

//equivalent, but creates slightly different sql:

// const filter = db.order.customer.isActive.eq(true).and(db.order.customer.name.startsWith('Harr'));

const order = await db.order.getOne(filter, {

customer: true,

deliveryAddress: true,

lines: true

});

}

Single row by primary key

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const order = await db.order.getById(1, {

customer: true,

deliveryAddress: true,

lines: true

});

}

Many rows by primary key

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const orders = await db.order.getMany([

{id: 1},

{id: 2}

],

{

customer: true,

deliveryAddress: true,

lines: true

});

}

Updating a single row

import map from './map';

const db = map.sqlite('demo.db');

update();

async function update() {

const order = await db.order.getById(1, {

customer: true,

deliveryAddress: true,

lines: true

});

order.orderDate = new Date();

order.deliveryAddress = null;

order.lines.push({product: 'Cloak of invisibility', amount: 600});

await order.saveChanges();

}

Updating many rows

import map from './map';

const db = map.sqlite('demo.db');

update();

async function update() {

let orders = await db.order.getAll({

orderBy: 'id',

lines: true,

deliveryAddress: true,

customer: true

});

orders[0].orderDate = new Date();

orders[0].deliveryAddress.street = 'Node street 2';

orders[0].lines[1].product = 'Big guitar';

orders[1].orderDate = '2023-07-14T12:00:00'; //iso-string is allowed

orders[1].deliveryAddress = null;

orders[1].customer = null;

orders[1].lines.push({product: 'Cloak of invisibility', amount: 600});

await orders.saveChanges();

}

Updating from JSON

The update method is suitable when a complete overwrite is required from a JSON object, typically in a REST API. However, it's important to consider that this method replaces the entire row and its children, which might not always be desirable in a multi-user environment.

import map from './map';

const db = map.sqlite('demo.db');

update();

async function update() {

const modified = {

orderDate: '2023-07-14T12:00:00',

customer: {

id: 2

},

deliveryAddress: {

name: 'Roger', //modified name

street: 'Node street 1',

postalCode: '7059',

postalPlace: 'Jakobsli',

countryCode: 'NO'

},

lines: [

{ id: 1, product: 'Bicycle', amount: 250 },

{ id: 2, product: 'Small guitar', amount: 150 },

{ product: 'Piano', amount: 800 } //the new line to be inserted

]

};

const order = await db.order.update(modified, {customer: true, deliveryAddress: true, lines: true});

}

Partially updating from JSON

The updateChanges method applies a partial update based on the difference between the original and the modified row. It is often preferable because it minimizes the risk of unintentionally overwriting data that may have been altered by other users in the meantime. To do so, you need to pass in the original row object, as it was before modification, as well.
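The idea of deriving a partial update from two snapshots can be sketched with a small helper that lists the top-level properties that differ. The function changedProperties is hypothetical and much coarser than what a real ORM would do (it compares whole properties by their JSON text), but it shows why the original row is needed.

```typescript
// Hypothetical helper: list the top-level properties whose JSON value differs.
function changedProperties(
  original: Record<string, unknown>,
  modified: Record<string, unknown>,
): string[] {
  const addedKeys = Object.keys(modified).filter(key => !(key in original));
  const keys = Object.keys(original).concat(addedKeys);
  return keys.filter(key => JSON.stringify(original[key]) !== JSON.stringify(modified[key]));
}

const originalOrder = {
  id: 1,
  orderDate: '2023-07-14T12:00:00',
  lines: [{ id: 1, product: 'Bicycle', amount: 250 }],
};
const modifiedOrder = {
  ...originalOrder,
  lines: [...originalOrder.lines, { product: 'Piano', amount: 800 }],
};

// Only 'lines' differs, so only 'lines' would need to be written.
const changed = changedProperties(originalOrder, modifiedOrder);
```

Properties that do not appear in the result were not touched by this client, so a concurrent change to them by another user is left alone.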

import map from './map';

const db = map.sqlite('demo.db');

update();

async function update() {

const original = {

id: 1,

orderDate: '2023-07-14T12:00:00',

customer: {

id: 2

},

deliveryAddress: {

id: 1,

name: 'George',

street: 'Node street 1',

postalCode: '7059',

postalPlace: 'Jakobsli',

countryCode: 'NO'

},

lines: [

{ id: 1, product: 'Bicycle', amount: 250 },

{ id: 2, product: 'Small guitar', amount: 150 }

]

};

const modified = JSON.parse(JSON.stringify(original));

modified.deliveryAddress.name = 'Roger';

modified.lines.push({ product: 'Piano', amount: 800 });

const order = await db.order.updateChanges(modified, original, { customer: true, deliveryAddress: true, lines: true });

}

Conflict resolution

Rows get updated using an optimistic concurrency approach by default. This means if a property being edited was meanwhile altered, an exception is raised, indicating the row was modified by a different user. You can change the concurrency strategy either at the table or column level.

Currently, there are three concurrency strategies:

- optimistic: Raises an exception if another user changes the property during an update.

- overwrite: Overwrites the property, regardless of changes by others.

- skipOnConflict: Silently avoids updating the property if another user has modified it in the interim.
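The per-property behavior of these strategies during an update can be modelled with three snapshots: what the client originally read, what the database holds now, and what the client wants to save. This is a simplified sketch under those assumptions, not RDB's implementation; applyUpdate and its parameter names are hypothetical.

```typescript
type UpdateStrategy = 'optimistic' | 'overwrite' | 'skipOnConflict';
type Values = Record<string, unknown>;

// 'original' is what the client read, 'current' is what the database holds now,
// 'modified' is what the client wants to save.
function applyUpdate(
  current: Values,
  original: Values,
  modified: Values,
  strategies: Record<string, UpdateStrategy> = {},
): Values {
  const result: Values = { ...current };
  for (const column of Object.keys(modified)) {
    if (modified[column] === original[column]) continue; // untouched by the client
    const changedByOthers = current[column] !== original[column];
    const configured = strategies[column];
    const strategy = configured !== undefined ? configured : 'optimistic';
    if (changedByOthers && strategy === 'optimistic') {
      throw new Error(`The row was changed by another user: ${column}`);
    }
    if (changedByOthers && strategy === 'skipOnConflict') continue;
    result[column] = modified[column];
  }
  return result;
}

const readByClient = { orderDate: '2023-07-14T12:00:00', customerId: 2 };
const inDatabase = { orderDate: '2023-07-15T08:00:00', customerId: 2 }; // changed by someone else
const wanted = { orderDate: '2023-07-20T12:00:00', customerId: 2 };

const saved = applyUpdate(inDatabase, readByClient, wanted, { orderDate: 'overwrite' });
// saved.orderDate is '2023-07-20T12:00:00': the other user's change is overwritten
```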

In the example below, we've set the concurrency strategy for orderDate to 'overwrite'. This implies that if other users modify orderDate while you're making changes, their updates will be overwritten.

import map from './map';

const db = map.sqlite('demo.db');

update();

async function update() {

const order = await db.order.getById(1, {

customer: true,

deliveryAddress: true,

lines: true

});

order.orderDate = new Date();

order.deliveryAddress = null;

order.lines.push({product: 'Cloak of invisibility', amount: 600});

await order.saveChanges( {

orderDate: {

concurrency: 'overwrite'

}});

}

Deleting a single row

import map from './map';

const db = map.sqlite('demo.db');

deleteRow();

async function deleteRow() {

const order = await db.order.getById(1);

await order.delete();

//will also delete deliveryAddress and lines

//but not customer

}

Deleting a row in an array

A common workflow involves retrieving multiple rows and then deleting a specific row from the array. This is straightforward with RDB, which allows updating, inserting, and deleting multiple rows in a single transaction. To modify the array, simply add, update, or remove elements, and then invoke the saveChanges() method on the array to persist the changes.

import map from './map';

const db = map.sqlite('demo.db');

updateInsertDelete();

async function updateInsertDelete() {

const orders = await db.order.getAll({

customer: true,

deliveryAddress: true,

lines: true

});

//will add line to the first order

orders[0].lines.push({

product: 'secret weapon',

amount: 355

});

//will delete second row

orders.splice(1, 1);

//will insert a new order with lines, deliveryAddress and set customerId

orders.push({

orderDate: new Date(2022, 0, 11, 9, 24, 47),

customer: {

id: 1

},

deliveryAddress: {

name: 'George',

street: 'Node street 1',

postalCode: '7059',

postalPlace: 'Jakobsli',

countryCode: 'NO'

},

lines: [

{ product: 'Magic tent', amount: 349 }

]

});

await orders.saveChanges();

}

Deleting many rows

import map from './map';

const db = map.sqlite('demo.db');

deleteRows();

async function deleteRows() {

let orders = await db.order.getAll({

where: x => x.customer.name.eq('George')

});

await orders.delete();

}

Deleting with concurrency

Concurrent operations can lead to conflicts. When you still want to proceed with the deletion regardless of potential interim changes, the 'overwrite' concurrency strategy can be used. This example demonstrates deleting rows even if the "delivery address" has been modified in the meantime. You can read more about concurrency strategies in Updating rows.

import map from './map';

const db = map.sqlite('demo.db');

deleteRows();

async function deleteRows() {

let orders = await db.order.getAll({

where: x => x.deliveryAddress.name.eq('George'),

customer: true,

deliveryAddress: true,

lines: true

});

await orders.delete({

deliveryAddress: {

concurrency: 'overwrite'

}

});

}

Batch delete

When removing a large number of records based on a certain condition, batch deletion can be efficient.

However, it's worth noting that batch deletes don't follow the cascade delete behavior by default. To achieve cascading in batch deletes, you must explicitly call the deleteCascade method.
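The difference between a plain batch delete and a cascading one can be pictured with an in-memory store. This is only a model of the behavior described above; the Store shape and both functions are hypothetical and unrelated to how RDB issues its SQL.

```typescript
interface OrderRec { id: number; }
interface LineRec { id: number; orderId: number; }
interface Store { orders: OrderRec[]; lines: LineRec[]; }

// Plain batch delete: only the matching orders are removed.
function deleteOrders(store: Store, match: (order: OrderRec) => boolean): Store {
  return { orders: store.orders.filter(o => !match(o)), lines: store.lines };
}

// Cascading batch delete: rows owned by the matching orders are removed as well.
function deleteOrdersCascade(store: Store, match: (order: OrderRec) => boolean): Store {
  const deletedIds = store.orders.filter(match).map(o => o.id);
  return {
    orders: store.orders.filter(o => !match(o)),
    lines: store.lines.filter(l => deletedIds.indexOf(l.orderId) === -1),
  };
}

const store: Store = {
  orders: [{ id: 1 }, { id: 2 }],
  lines: [
    { id: 1, orderId: 1 },
    { id: 2, orderId: 1 },
    { id: 3, orderId: 2 },
  ],
};

const plain = deleteOrders(store, o => o.id === 1);          // lines of order 1 are left behind
const cascaded = deleteOrdersCascade(store, o => o.id === 1); // lines of order 1 are gone too
```

In a real database the leftover lines from the plain variant may also violate foreign key constraints, which is one reason to choose the cascading variant deliberately.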

import map from './map';

const db = map.sqlite('demo.db');

deleteRows();

async function deleteRows() {

const filter = db.order.deliveryAddress.name.eq('George');

await db.order.delete(filter);

}

Batch delete cascade

When deleting records, sometimes associated data in related tables also needs to be removed. This cascade delete helps maintain database integrity.

import map from './map';

const db = map.sqlite('demo.db');

deleteRows();

async function deleteRows() {

const filter = db.order.deliveryAddress.name.eq('George');

await db.order.deleteCascade(filter);

}

Batch delete by primary key

For efficiency, you can also delete records directly if you know their primary keys.

import map from './map';

const db = map.sqlite('demo.db');

deleteRows();

async function deleteRows() {

await db.customer.delete([{id: 1}, {id: 2}]);

}

📄 server.ts

import map from './map';

import { json } from 'body-parser';

import express from 'express';

import cors from 'cors';

const db = map.sqlite('demo.db');

express().disable('x-powered-by')

.use(json({ limit: '100mb' }))

.use(cors())

//for demonstrational purposes, authentication middleware is not shown here.

.use('/rdb', db.express())

.listen(3000, () => console.log('Example app listening on port 3000!'));

📄 browser.ts

import map from './map';

const db = map.http('http://localhost:3000/rdb');

updateRows();

async function updateRows() {

const order = await db.order.getOne(undefined, {

where: x => x.lines.any(line => line.product.startsWith('Magic wand'))

.and(x.customer.name.startsWith('Harry')),

lines: true

});

order.lines.push({

product: 'broomstick',

amount: 300,

});

await order.saveChanges();

}

Interceptors and base filter

In the next setup, axios interceptors are employed on the client side to add an Authorization header to requests. Meanwhile, on the server side, an Express middleware (validateToken) ensures the presence of the Authorization header, while a base filter is applied on the order table to filter incoming requests based on the customerId extracted from this header. This combined approach enhances security by ensuring that users can only access data relevant to their authorization level and that every request is accompanied by a token. In real-world applications, it's advisable to use a more comprehensive token system and expand error handling to manage a wider range of potential issues.

One notable side effect compared to the previous example, is that only the order table is exposed for interaction, while all other potential tables in the database remain shielded from direct client access (except for related tables). If you want to expose a table without a baseFilter, just set the tableName to an empty object.
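Because the base filter depends on a value parsed from a request header, it can help to isolate and validate that parsing. The helper below is a hypothetical sketch for the demo token format "Bearer 2" only; it is not part of RDB, and a real application would verify a signed token instead.

```typescript
// Hypothetical helper: extract a numeric customerId from a header like "Bearer 2".
// Returns undefined when the header is missing or malformed.
function customerIdFromHeader(header: string | undefined): number | undefined {
  if (header === undefined) return undefined;
  const match = /^Bearer (\d+)$/.exec(header.trim());
  if (match === null) return undefined;
  return parseInt(match[1], 10);
}

const parsedId = customerIdFromHeader('Bearer 2');        // 2
const missingId = customerIdFromHeader(undefined);        // undefined
const malformedId = customerIdFromHeader('Bearer abc');   // undefined
```

A baseFilter could call such a helper and reject the request when it returns undefined, rather than passing NaN on to the filter.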

📄 server.ts

import map from './map';

import { json } from 'body-parser';

import express from 'express';

import cors from 'cors';

const db = map.sqlite('demo.db');

express().disable('x-powered-by')

.use(json({ limit: '100mb' }))

.use(cors())

.use('/rdb', validateToken)

.use('/rdb', db.express({

order: {

baseFilter: (db, req, _res) => {

const customerId = Number.parseInt(req.headers.authorization.split(' ')[1], 10); //Bearer 2

return db.order.customerId.eq(customerId);

}

}

}))

.listen(3000, () => console.log('Example app listening on port 3000!'));

function validateToken(req, res, next) {

// For demo purposes, we're just checking against existence of authorization header

// In a real-world scenario, this would be a dangerous approach because it bypasses signature validation

const authHeader = req.headers.authorization;

if (authHeader)

return next();

else

return res.status(401).json({ error: 'Authorization header missing' });

}

📄 browser.ts

import map from './map';

const db = map.http('http://localhost:3000/rdb');

updateRows();

async function updateRows() {

db.interceptors.request.use((config) => {

// For demo purposes, we're just adding hardcoded token

// In a real-world scenario, use a proper JSON web token

config.headers.Authorization = 'Bearer 2' //customerId

return config;

});

db.interceptors.response.use(

response => response,

(error) => {

if (error.response && error.response.status === 401) {

console.dir('Unauthorized, dispatch a login action');

//redirectToLogin();

}

return Promise.reject(error);

}

);

const order = await db.order.getOne(undefined, {

where: x => x.lines.any(line => line.product.startsWith('Magic wand'))

.and(db.order.customer.name.startsWith('Harry')),

lines: true

});

order.lines.push({

product: 'broomstick',

amount: 300

});

await order.saveChanges();

}

Including a relation

This example fetches orders and their corresponding delivery addresses, including all columns from both entities.

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const rows = await db.order.getAll({

deliveryAddress: true

});

}

Including a subset of columns

In scenarios where only specific fields are required, you can specify a subset of columns to include. In the example below, orderDate is explicitly excluded, so all other columns in the order table are included by default. For the deliveryAddress relation, only countryCode and name are included, excluding all other columns. If you have a mix of explicitly included and excluded columns, all other columns will be excluded from that table.
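The inclusion rule stated above can be modelled as a small function over column names. This is a sketch of the rule as written in this section, using the same strategy as the RDB example; selectColumns is a hypothetical name, and the real library may behave differently in details such as primary key handling.

```typescript
// Simplified model of the inclusion rule (not RDB's code):
// - if any column is explicitly set to true, only those columns are returned
// - otherwise every column is returned except the ones set to false
function selectColumns(allColumns: string[], strategy: Record<string, boolean>): string[] {
  const included = allColumns.filter(column => strategy[column] === true);
  if (included.length > 0) return included;
  return allColumns.filter(column => strategy[column] !== false);
}

const orderColumns = selectColumns(['id', 'orderDate', 'customerId'], { orderDate: false });
// orderColumns: ['id', 'customerId']

const addressColumns = selectColumns(
  ['id', 'orderId', 'name', 'street', 'postalCode', 'postalPlace', 'countryCode'],
  { countryCode: true, name: true },
);
// addressColumns: ['name', 'countryCode']
```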

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const rows = await db.order.getAll({

orderDate: false,

deliveryAddress: {

countryCode: true,

name: true

}

});

}

Equal

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const rows = await db.customer.getAll({

where: x => x.name.equal('Harry')

});

}

Not equal

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const rows = await db.customer.getAll({

where: x => x.name.notEqual('Harry')

});

}

Contains

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const rows = await db.customer.getAll({

where: x => x.name.contains('arr')

});

}

Starts with

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const filter = db.customer.name.startsWith('Harr');

const rows = await db.customer.getAll({

where: x => x.name.startsWith('Harr')

});

}

Ends with

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const rows = await db.customer.getAll({

where: x => x.name.endsWith('arry')

});

}

Greater than

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const rows = await db.order.getAll({

where: x => x.orderDate.greaterThan('2023-07-14T12:00:00')

});

}

Greater than or equal

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const rows = await db.order.getAll({

where: x => x.orderDate.greaterThanOrEqual('2023-07-14T12:00:00')

});

}

Less than

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const rows = await db.order.getAll({

where: x => x.orderDate.lessThan('2023-07-14T12:00:00')

});

}

Less than or equal

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const rows = await db.order.getAll({

where: x => x.orderDate.lessThanOrEqual('2023-07-14T12:00:00')

});

}

Between

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const rows = await db.order.getAll({

where: x => x.orderDate.between('2023-07-14T12:00:00', '2024-07-14T12:00:00')

});

}

In

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const rows = await db.order.getAll({

where: x => x.customer.name.in('George', 'Harry')

});

}

Raw SQL filter

You can use the raw SQL filter alone or in combination with a regular filter.

Here the raw filter queries for customers whose name ends with "arry". The composite filter combines the raw SQL filter with a regular filter that checks for a customer balance greater than 100. Note that, as a precaution against SQL injection attacks, raw SQL filters sent from the browser are not allowed. Attempting to use one there results in an HTTP status 403 (Forbidden) being returned.

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const rawFilter = {

sql: 'name like ?',

parameters: ['%arry']

};

const rowsWithRaw = await db.customer.getAll({

where: () => rawFilter

});

const rowsWithCombined = await db.customer.getAll({

where: x => x.balance.greaterThan(100).and(rawFilter)

});

}

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const orders = await db.order.getAll({

lines: {

where: x => x.product.contains('broomstick')

},

deliveryAddress: true,

customer: true

});

}

And

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const rows = await db.order.getAll({

where: x => x.customer.name.equal('Harry')

.and(x.orderDate.greaterThan('2023-07-14T12:00:00'))

});

}

Or

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const rows = await db.order.getAll({

where: y => y.customer( x => x.name.equal('George')

.or(x.name.equal('Harry')))

});

}

Not

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

//Neither George nor Harry

const rows = await db.order.getAll({

where: y => y.customer(x => x.name.equal('George')

.or(x.name.equal('Harry')))

.not()

});

}

Exists

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const rows = await db.order.getAll({

where: x => x.deliveryAddress.exists()

});

}

Any

The any operator is employed when the objective is to find records where at least one item in a collection meets the specified criteria.

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const filter = db.order.lines.any(x => x.product.contains('guitar'));

//equivalent syntax:

// const filter = db.order.lines.product.contains('guitar');

const rows = await db.order.getAll({

where: y => y.lines.any(x => x.product.contains('guitar'))

});

}

All

Conversely, the all operator ensures that every item in a collection adheres to the defined condition.

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const rows = await db.order.getAll({

where: y => y.lines.all(x => x.product.contains('a'))

});

}

None

The none operator, as the name suggests, is used to select records where not a single item in a collection meets the condition.
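To compare the three collection operators (any, all, none) side by side, the following in-memory sketch mirrors their meaning with plain array methods. It is not RDB code: the sample orders are invented, and string matching here is case-sensitive, which may differ from how a given database compares strings.

```typescript
// In-memory model of any / all / none over a child collection. NOT RDB code.
interface Line { product: string; }
interface Order { id: number; lines: Line[]; }

// Sample data, assumed for illustration.
const orders: Order[] = [
  { id: 1, lines: [{ product: 'Magic wand' }, { product: 'guitar strap' }] },
  { id: 2, lines: [{ product: 'broomstick' }] },
];

const containsGuitar = (line: Line) => line.product.indexOf('guitar') !== -1;
const containsA = (line: Line) => line.product.indexOf('a') !== -1;
const isMagicWand = (line: Line) => line.product === 'Magic wand';

// any: at least one line matches.
const anyGuitar = orders.filter(o => o.lines.some(containsGuitar)).map(o => o.id);

// all: every line matches.
const allContainA = orders.filter(o => o.lines.every(containsA)).map(o => o.id);

// none: not a single line matches.
const noneMagicWand = orders.filter(o => !o.lines.some(isMagicWand)).map(o => o.id);
```

With this data, order 1 satisfies the any and all conditions, while only order 2 satisfies the none condition.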

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const rows = await db.order.getAll({

where: y => y.lines.none(x => x.product.equal('Magic wand'))

});

}

import map from './map';

const db = map.sqlite('demo.db');

execute();

async function execute() {

await db.transaction(async tx => {

const customer = await tx.customer.getById(1);

customer.balance = 100;

await customer.saveChanges();

throw new Error('This will rollback');

});

}

- string maps to VARCHAR or TEXT in SQL.

- numeric maps to INTEGER, DECIMAL, NUMERIC, TINYINT, FLOAT/REAL or DOUBLE in SQL.

- boolean maps to BIT, TINYINT(1) or INTEGER in SQL.

- uuid is represented as a string in JavaScript and maps to UUID, GUID or VARCHAR in SQL.

- date is represented as an ISO 8601 string in JavaScript and maps to DATE, DATETIME, TIMESTAMP or DAY in SQL. Representing datetime values as ISO 8601 strings, rather than relying on JavaScript's native Date object, has multiple advantages, especially when dealing with databases and servers in different time zones. The datetime values are inherently accompanied by their respective time zones. This ensures that the datetime value remains consistent regardless of where it's being viewed or interpreted. On the other hand, JavaScript's Date object is typically tied to the time zone of the environment in which it's executed, which could lead to inconsistencies between the client and the database server.

- dateWithTimeZone is represented as an ISO 8601 string in JavaScript and maps to TIMESTAMP WITH TIME ZONE in Postgres and DATETIMEOFFSET in MS SQL. Contrary to what its name might imply, timestamptz (TIMESTAMP WITH TIME ZONE) in Postgres doesn't store the time zone data. Instead, it adjusts the provided time value to UTC (Coordinated Universal Time) before storing it. When a timestamptz value is retrieved, PostgreSQL will automatically adjust the date-time to the time zone setting of the PostgreSQL session (often the server's time zone, unless changed by the user). The primary benefit of DATETIMEOFFSET in MS SQL is its ability to keep track of the time zone context. If you're dealing with global applications where understanding the original time zone context is critical (like for coordinating meetings across time zones or logging events), DATETIMEOFFSET is incredibly valuable.

- binary is represented as a base64 string in JavaScript and maps to BLOB, BYTEA or VARBINARY(max) in SQL.

- json and jsonOf<T> are represented as an object or array in JavaScript and map to JSON, JSONB, NVARCHAR(max) or TEXT (SQLite) in SQL.
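The point about ISO 8601 strings versus the native Date object can be seen with standard JavaScript alone. The sketch below uses no RDB calls and the timestamp is an arbitrary example value. It shows that a Date keeps only the instant, so the original offset is lost on the round trip, whereas the string carries it along.

```typescript
// Standard JavaScript only; no RDB calls. The timestamp is an example value.
const original = '2023-07-14T12:00:00+02:00';

// Round-tripping through Date normalizes the value to UTC.
const viaDate = new Date(original).toISOString(); // '2023-07-14T10:00:00.000Z'

// Both forms denote the same instant in time...
const sameInstant = new Date(original).getTime() === new Date(viaDate).getTime(); // true

// ...but only the original string still tells us the local offset.
const offsetInString = original.slice(-6); // '+02:00'
```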

📄 map.ts

import rdb from 'rdb';

interface Pet {

name: string;

kind: string;

}

const map = rdb.map(x => ({

demo: x.table('demo').map(x => ({

id: x.column('id').uuid().primary().notNull(),

name: x.column('name').string(),

balance: x.column('balance').numeric(),

regularDate: x.column('regularDate').date(),

tzDate: x.column('tzDate').dateWithTimeZone(),

picture: x.column('picture').binary(),

pet: x.column('pet').jsonOf<Pet>(), //generic

pet2: x.column('pet2').json(), //non-generic

}))

}));

📄 map.js

import rdb from 'rdb';

/**

* @typedef {Object} Pet

* @property {string} name - The name of the pet.

* @property {string} kind - The kind of pet

*/

/** @type {Pet} */

let pet;

const map = rdb.map(x => ({

demo: x.table('demo').map(x => ({

id: x.column('id').uuid().primary().notNull(),

name: x.column('name').string(),

balance: x.column('balance').numeric(),

regularDate: x.column('regularDate').date(),

tzDate: x.column('tzDate').dateWithTimeZone(),

picture: x.column('picture').binary(),

pet: x.column('pet').jsonOf(pet), //generic

pet2: x.column('pet2').json(), //non-generic

}))

}));

In the code below, the id column's default value is set to a UUID generated by crypto.randomUUID(), and the isActive column's default is set to true.

import rdb from 'rdb';

import crypto from 'crypto';

const map = rdb.map(x => ({

myTable: x.table('myTable').map(({ column }) => ({

id: column('id').uuid().primary().default(() => crypto.randomUUID()),

name: column('name').string(),

balance: column('balance').numeric(),

isActive: column('isActive').boolean().default(true),

}))

}));

export default map;

📄 map.ts

import rdb from 'rdb';

interface Pet {

name: string;

kind: string;

}

let petSchema = {

"properties": {

"name": { "type": "string" },

"kind": { "type": "string" }

}

};

function validateName(value?: string) {

if (value && value.length > 10)

throw new Error('Length cannot exceed 10 characters');

}

const map = rdb.map(x => ({

demo: x.table('demo').map(x => ({

id: x.column('id').uuid().primary().notNullExceptInsert(),

name: x.column('name').string().validate(validateName),

pet: x.column('pet').jsonOf<Pet>().JSONSchema(petSchema)

}))

}));

export default map;

📄 map.js

import rdb from 'rdb';

/**

* @typedef {Object} Pet

* @property {string} name - The name of the pet.

* @property {string} kind - The kind of pet

*/

/** @type {Pet} */

let pet;

let petSchema = {

"properties": {

"name": { "type": "string" },

"kind": { "type": "string" }

}

};

function validateName(value) {

if (value && value.length > 10)

throw new Error('Length cannot exceed 10 characters');

}

const map = rdb.map(x => ({

demo: x.table('demo').map(x => ({

id: x.column('id').uuid().primary().notNullExceptInsert(),

name: x.column('name').string().validate(validateName),

pet: x.column('pet').jsonOf(pet).JSONSchema(petSchema)

}))

}));

export default map;

Consider a scenario where we have orders and order lines, and each order line is uniquely identified by combining the order type, order number, and line number.
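As a plain TypeScript illustration of that idea (not RDB code), the sketch below matches order lines to their order by comparing both shared key parts, orderType and orderNo. The helper names and sample rows are hypothetical.

```typescript
// In-memory illustration of a composite key. NOT RDB code.
interface OrderKey { orderType: string; orderNo: number; }
interface OrderLine extends OrderKey { lineNo: number; product: string; }

// Two rows belong to the same order only if BOTH key parts match.
function sameOrder(a: OrderKey, b: OrderKey): boolean {
  return a.orderType === b.orderType && a.orderNo === b.orderNo;
}

function linesOf(order: OrderKey, all: OrderLine[]): OrderLine[] {
  return all.filter(line => sameOrder(order, line));
}

// Sample rows, assumed for illustration. Note that orderNo 1 appears under two order types.
const allLines: OrderLine[] = [
  { orderType: 'web', orderNo: 1, lineNo: 1, product: 'Magic wand' },
  { orderType: 'web', orderNo: 1, lineNo: 2, product: 'broomstick' },
  { orderType: 'store', orderNo: 1, lineNo: 1, product: 'guitar' },
];

const webOrderProducts = linesOf({ orderType: 'web', orderNo: 1 }, allLines)
  .map(line => line.product);
```

Matching on orderNo alone would wrongly pull in the 'store' line, which is why the relation needs both columns.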

import rdb from 'rdb';

const map = rdb.map(x => ({

order: x.table('_order').map(({ column }) => ({

orderType: column('orderType').string().primary().notNull(),

orderNo: column('orderNo').numeric().primary().notNull(),

orderDate: column('orderDate').date().notNull(),

})),

orderLine: x.table('orderLine').map(({ column }) => ({

orderType: column('orderType').string().primary().notNull(),

orderNo: column('orderNo').numeric().primary().notNull(),

lineNo: column('lineNo').numeric().primary().notNull(),

product: column('product').string(),

}))

})).map(x => ({

order: x.order.map(v => ({

lines: v.hasMany(x.orderLine).by('orderType', 'orderNo'),

}))

}));

export default map;

import rdb from 'rdb';

const map = rdb.map(x => ({

customer: x.table('client').map(({ column }) => ({

id: column('id').numeric().primary(),

name: column('name').string()

})).columnDiscriminators(`client_type='customer'`),

vendor: x.table('client').map(({ column }) => ({

id: column('id').numeric().primary(),

name: column('name').string()

})).columnDiscriminators(`client_type='vendor'`),

}));

export default map;

In the example below, the formula discriminator categorizes bookings into customerBooking and internalBooking within the same booking table. The categorization is based on the value of the booking_no column. For customerBooking, records are identified where the booking number falls within the range of 10000 to 99999. For internalBooking, the range is 1000 to 9999. These conditions are used during fetch and delete operations to ensure that the program interacts with the appropriate subset of records according to their booking number. Unlike column discriminators, formula discriminators are not used during insert operations since they rely on existing data to evaluate the condition.

The '@this' acts as a placeholder within the formula. When RDB constructs a query, it replaces '@this' with the appropriate alias for the table being queried. This replacement is crucial to avoid ambiguity, especially when dealing with joins with ambiguous column names.
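The placeholder substitution and the two ranges can be sketched in plain TypeScript. This is only a model of the behaviour described above: `applyAlias`, `classify` and the alias 'b1' are hypothetical, and the actual aliases RDB generates are not shown in this document.

```typescript
// Model of '@this' substitution and the booking ranges. NOT RDB's implementation.

// Replace every '@this' placeholder with a concrete table alias.
function applyAlias(formula: string, alias: string): string {
  return formula.replace(/@this/g, () => alias);
}

const customerFormula = '@this.booking_no between 10000 and 99999';
// 'b1' is an assumed alias, chosen for illustration.
const customerSql = applyAlias(customerFormula, 'b1');
// 'b1.booking_no between 10000 and 99999'

type BookingKind = 'customerBooking' | 'internalBooking' | 'unmapped';

// SQL BETWEEN is inclusive on both ends, so the checks below are inclusive too.
function classify(bookingNo: number): BookingKind {
  if (bookingNo >= 10000 && bookingNo <= 99999) return 'customerBooking';
  if (bookingNo >= 1000 && bookingNo <= 9999) return 'internalBooking';
  return 'unmapped';
}

const kinds = [999, 1000, 9999, 10000, 99999].map(classify);
```

A booking number outside both ranges (999 here) is matched by neither mapping, so such rows would not be visible through either table.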

import rdb from 'rdb';

const map = rdb.map(x => ({

customerBooking: x.table('booking').map(({ column }) => ({

id: column('id').uuid().primary(),

bookingNo: column('booking_no').numeric()

})).formulaDiscriminators('@this.booking_no between 10000 and 99999'),

internalBooking: x.table('booking').map(({ column }) => ({

id: column('id').uuid().primary(),

bookingNo: column('booking_no').numeric()

})).formulaDiscriminators('@this.booking_no between 1000 and 9999'),

}));

export default map;

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const query = {

sql: 'select * from customer where name like ?',

parameters: ['%arry']

};

const rows = await db.query(query);

}

You can count records and aggregate numerical columns. This can be done either across rows or separately for each row.

Supported functions include:

- count

- sum

- min

- max

- avg

On each row

In this example, we are counting the number of lines and calculating the total amount for each order. These are represented as the properties numberOfLines and totalAmount. You can call these aggregated properties whatever you want.

You can also elevate associated data to a parent level for easier access. In the example below, balance of the customer is elevated to the root level.
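To make the shape of the result concrete, here is an in-memory sketch of per-row aggregation in plain TypeScript. It is not RDB code, the sample orders and amounts are invented, and it only shows the intended meaning of the three properties.

```typescript
// In-memory model of per-row aggregates. NOT RDB code.
interface Line { id: number; amount: number; }
interface Customer { name: string; balance: number; }
interface Order { id: number; customer: Customer; lines: Line[]; }

// Sample data, assumed for illustration.
const sampleOrders: Order[] = [
  {
    id: 1,
    customer: { name: 'George', balance: 177 },
    lines: [{ id: 1, amount: 300 }, { id: 2, amount: 250 }],
  },
  {
    id: 2,
    customer: { name: 'Harry', balance: 200 },
    lines: [{ id: 3, amount: 300 }],
  },
];

const perOrder = sampleOrders.map(order => ({
  id: order.id,
  // count of lines for this order
  numberOfLines: order.lines.length,
  // sum of line amounts for this order
  totalAmount: order.lines.reduce((sum, line) => sum + line.amount, 0),
  // customer balance lifted to the root of the row
  balance: order.customer.balance,
}));
```

Each order keeps its own row. The aggregates are computed over that order's lines only.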

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const orders = await db.order.getAll({

numberOfLines: x => x.count(x => x.lines.id),

totalAmount: x => x.sum(x => x.lines.amount),

balance: x => x.customer.balance

});

}

Across all rows

The aggregate function efficiently groups data together.

In this particular example, for each customer, it counts the number of lines associated with their orders and calculates the total amount of these lines.

Under the hood, it will run a SQL GROUP BY on customerId and customerName.
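The grouping can be pictured with a small in-memory sketch in plain TypeScript. It is not what RDB executes (that happens in SQL). `groupByCustomer` and the joined rows are hypothetical and stand in for orders joined with their lines.

```typescript
// In-memory model of GROUP BY customerId, customerName. NOT RDB code.
interface JoinedRow { customerId: number; customerName: string; lineId: number; amount: number; }
interface Group { customerId: number; customerName: string; numberOfLines: number; totals: number; }

function groupByCustomer(rows: JoinedRow[]): Group[] {
  const index: { [key: string]: Group } = {};
  const groups: Group[] = [];
  for (const row of rows) {
    // The group key combines both grouping columns.
    const key = row.customerId + '|' + row.customerName;
    let group = index[key];
    if (!group) {
      group = { customerId: row.customerId, customerName: row.customerName, numberOfLines: 0, totals: 0 };
      index[key] = group;
      groups.push(group);
    }
    group.numberOfLines += 1;   // count(lines.id)
    group.totals += row.amount; // sum(lines.amount)
  }
  return groups;
}

// Sample joined rows, assumed for illustration.
const grouped = groupByCustomer([
  { customerId: 1, customerName: 'George', lineId: 1, amount: 100 },
  { customerId: 1, customerName: 'George', lineId: 2, amount: 50 },
  { customerId: 2, customerName: 'Harry', lineId: 3, amount: 300 },
]);
```

Unlike the per-row variant, the result has one row per customer rather than one row per order.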

import map from './map';

const db = map.sqlite('demo.db');

getAggregates();

async function getAggregates() {

const orders = await db.order.aggregate({

where: x => x.orderDate.greaterThan(new Date(2022, 0, 11, 9, 24, 47)),

customerId: x => x.customerId,

customerName: x => x.customer.name,

numberOfLines: x => x.count(x => x.lines.id),

totals: x => x.sum(x => x.lines.amount)

});

}

Count

For convenience, you can use the count directly on the table instead of using the aggregated query syntax.

import map from './map';

const db = map.sqlite('demo.db');

getCount();

async function getCount() {

const filter = db.order.lines.any(

line => line.product.contains('broomstick')

);

const count = await db.order.count(filter);

console.log(count); //2

}

📄 map.ts

import rdb from 'rdb';

const map = rdb.map(x => ({

customer: x.table('customer').map(({ column }) => ({

id: column('id').numeric().primary().notNullExceptInsert(),

name: column('name').string(),

balance: column('balance').numeric().serializable(false),

isActive: column('isActive').boolean(),

}))

}));

export default map;

📄 sensitive.ts

import map from './map';

const db = map.sqlite('demo.db');

getRows();

async function getRows() {

const george = await db.customer.insert({

name: 'George',

balance: 177,

isActive: true

});

consol
