@ccssmnn/md-ai v0.5.0

Markdown AI

A command-line tool and library for agentic coding using markdown in your own $EDITOR.

Markdown AI lets you interact with large language models (LLMs) directly from your terminal and favorite editor, storing the whole chat as markdown.

Why does this exist?

- The LLM chat editing experience in web and editor UIs is bad.

- Editing files in your editor is good.

- LLMs naturally respond in markdown.

- Markdown editors come with syntax highlighting and rich editing features.

- Runs locally on your machine, uses your API keys, and can leverage local tools.

Features

- Entire chat history stored as a single markdown file.

- Chat with the LLM in the terminal.

- Open your editor from the CLI to edit the entire chat history and continue after closing the editor.

- Built-in tools for listing, reading, searching, and writing files, with permission prompts for writing.

- Support for custom system prompts.

- Library mode for custom models, tools, and integration with MCP servers via MCP Tools.

Getting Started

    npm install -g @ccssmnn/md-ai

Set your API key (example for Google Generative AI):

    export GOOGLE_GENERATIVE_AI_API_KEY=your_api_key

Start a chat session:

    md-ai chat.md

Tools

- listFiles: Lists files in the working directory.

- readFiles: Reads file contents.

- writeFiles: Writes content to files with permission prompts.

- grepSearch: Searches text in files using grep.

- execCommand: Executes shell commands with permission prompts.

- fetchUrlContent: Fetches and extracts relevant text content from a given URL. Supports optional filtering of extracted links by regex patterns (only links matching all patterns are included) and limiting the number of returned links. Returns the main content and a list of meaningful links with descriptions to help navigate the page.
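The link filtering described for fetchUrlContent can be pictured with a minimal sketch. The `PageLink` shape, the `filterLinks` name, and the choice to match patterns against the URL are assumptions made for illustration, not the tool's actual API:

```typescript
// Hypothetical shape for an extracted link; not the library's actual type.
interface PageLink {
  url: string;
  description: string;
}

// Keep only links whose URL matches every pattern, then cap the count.
// Assumption: patterns are tested against the URL, not the description.
function filterLinks(
  links: PageLink[],
  patterns: string[] = [],
  maxLinks: number = Infinity,
): PageLink[] {
  const regexes = patterns.map((p) => new RegExp(p));
  const matching = links.filter((link) =>
    regexes.every((re) => re.test(link.url)),
  );
  return matching.slice(0, maxLinks);
}

const links: PageLink[] = [
  { url: "https://example.com/docs/intro", description: "Introduction" },
  { url: "https://example.com/docs/api", description: "API reference" },
  { url: "https://example.com/blog/news", description: "News" },
];

// Both patterns must match, and at most one link is returned.
const docsLinks = filterLinks(links, ["example\\.com", "/docs/"], 1);
console.log(docsLinks);
```

With no patterns, every link passes the filter, so the limit is the only thing that trims the list.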

Usage

CLI

    md-ai <chat.md> [options]

Options:

- --config <path>: Custom config file path.

- --show-config: Show the final configuration.

- -s, --system <path>: Path to system prompt file.

- -m, --model <provider:model>: AI model to use (default: google:gemini-2.0-flash).

- -e, --editor <cmd>: Editor command (default: $EDITOR or 'vi +99999').

- -c, --cwd <path>: Working directory for file tools.

- --no-tools: Disable all tools (pure chat mode).

- --no-compression: Disable compression for tool call/result fences.
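To make the `<provider:model>` format and the editor fallback concrete, here is a small sketch. Both helpers (`parseModelSpec`, `resolveEditor`) are hypothetical and only mirror the documented defaults; the CLI's real parsing may differ:

```typescript
// Hypothetical helper: split "provider:model" at the first colon only,
// since a model name may itself contain colons.
function parseModelSpec(spec: string): { provider: string; model: string } {
  const idx = spec.indexOf(":");
  if (idx <= 0 || idx === spec.length - 1) {
    throw new Error(`Expected <provider:model>, got "${spec}"`);
  }
  return { provider: spec.slice(0, idx), model: spec.slice(idx + 1) };
}

// Hypothetical helper: the flag wins, then $EDITOR, then the documented
// fallback. The environment is passed in so the function is easy to test.
function resolveEditor(
  flag: string | undefined,
  env: Record<string, string | undefined>,
): string {
  return flag || env["EDITOR"] || "vi +99999";
}

const spec = parseModelSpec("google:gemini-2.0-flash");
console.log(spec.provider, spec.model);

const editor = resolveEditor(undefined, { EDITOR: "nvim" });
console.log(editor);
```

Using `||` rather than `??` treats an empty `$EDITOR` as unset, which seems the safer reading of the default.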

Editor

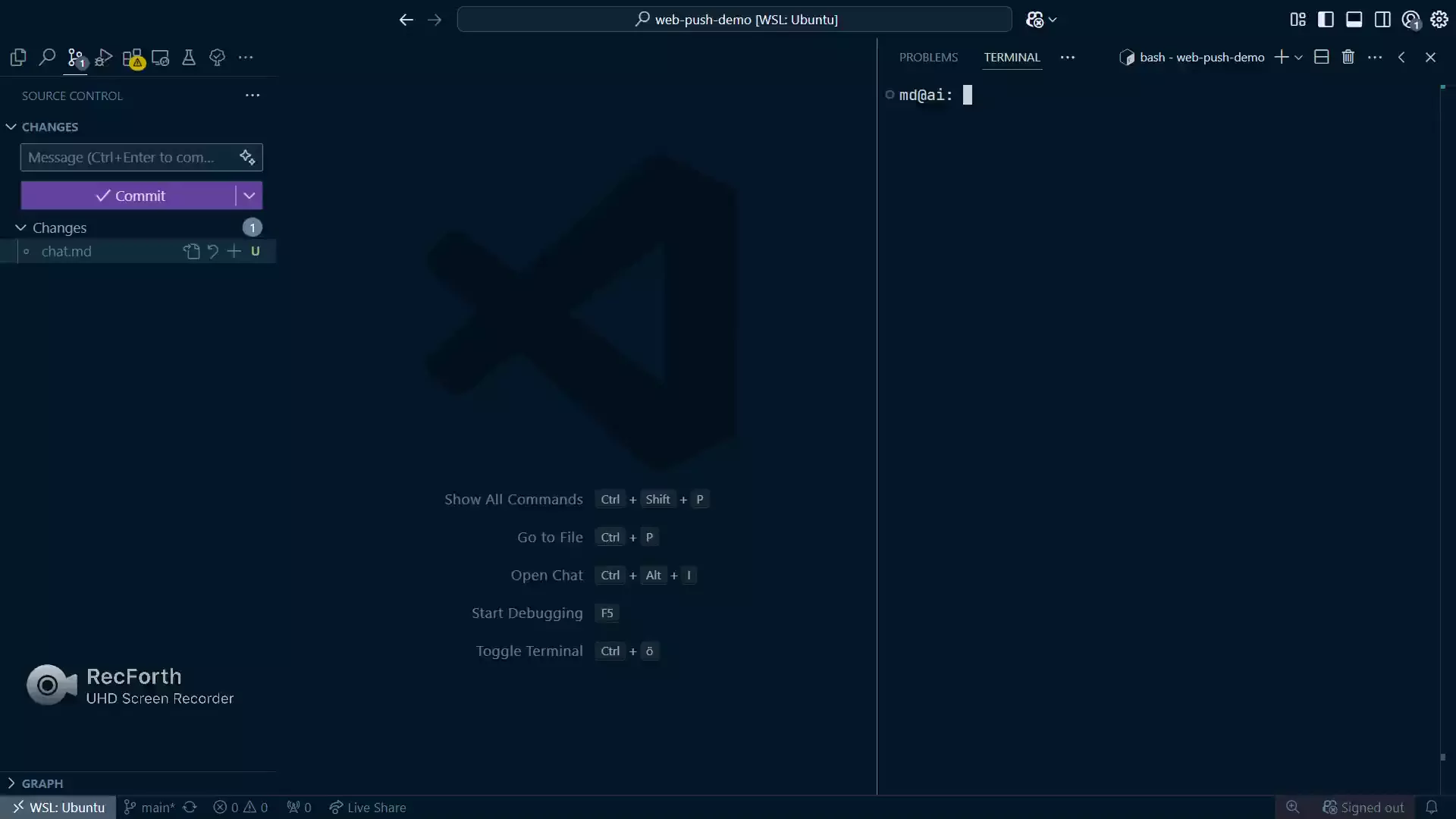

VS Code

Set the editor command:

    md-ai -e 'code --wait' chat.md

Or in config file:

    { "editor": "code --wait" }

Recommended Shortcuts:

- Symbol Search (ctrl+shift+o): Navigate chat history via headings.

- Jump to End (ctrl+end): Quickly jump to the end of the chat.

- Save (ctrl+s) and Close (ctrl+w): Save and close the chat file to trigger the AI call.

- Focus Terminal (ctrl+alt+j): Focus terminal panel.

- Focus Editor (ctrl+1): Jump back to editor.

- Toggle Panel (ctrl+j): Show/hide terminal panel.

- Maximize Panel (ctrl+shift+j): Maximize terminal panel.

Library

Use as a library with custom tools and models:

    import { google } from "@ai-sdk/google";
    import { runMarkdownAI, tools } from "@ccssmnn/md-ai";

    await runMarkdownAI({
      path: "./chat.md",
      editor: "code --wait",
      ai: {
        model: google("gemini-2.0-flash"),
        system: "You are a helpful assistant.",
        tools: {
          readFiles: tools.createReadFilesTool({ cwd: "./" }),
          listFiles: tools.createListFilesTool({ cwd: "./" }),
          writeFiles: tools.createWriteFilesTool({ cwd: "./" }),
          grepSearch: tools.createGrepSearchTool({ cwd: "./" }),
          execCommand: tools.createExecCommandTool({ cwd: "./", alwaysAllow: [] }),
        },
      },
    });

Configuration

Markdown AI supports a config.json file (default location: ~/.config/md-ai/config.json). CLI flags override config file settings.
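The precedence rule above (CLI flags override config file settings) can be sketched as a simple layered merge. The `Settings` shape and the `mergeSettings` function are assumptions for illustration, using only the `model`, `editor`, and `system` keys; this is not the tool's actual loader:

```typescript
// Hypothetical settings shape limited to the model, editor, and system keys.
interface Settings {
  model?: string;
  editor?: string;
  system?: string;
}

// Layer defaults, then the config file, then CLI flags.
function mergeSettings(
  defaults: Settings,
  fileConfig: Settings,
  cliFlags: Settings,
): Settings {
  const merged: Settings = { ...defaults, ...fileConfig };
  for (const key of Object.keys(cliFlags) as (keyof Settings)[]) {
    const value = cliFlags[key];
    // Skip unset flags so they do not erase a value from the config file.
    if (value !== undefined) merged[key] = value;
  }
  return merged;
}

const finalSettings = mergeSettings(
  { model: "google:gemini-2.0-flash" }, // documented default model
  { model: "openai:gpt-4o", editor: "code --wait" }, // from config.json
  { editor: "vim", model: undefined }, // only -e was passed on the CLI
);
console.log(finalSettings);
```

Here the model comes from the config file and the editor from the CLI flag, which is the kind of result `--show-config` is meant to let you inspect.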

Example config.json:

    {
      "model": "openai:gpt-4o",
      "editor": "code --wait",
      "system": "path/to/system-prompt.md"
    }

How does it work?

The chat is serialized into markdown after each AI invocation. You can edit the markdown file directly, and it will be parsed into messages before sending to the AI.

Example markdown chat snippet:

    ## User

    call the tool for me

    ## Assistant

    will do!

    \`\`\`tool-call
    {
      "toolCallId": "1234",
      "toolName": "myTool",
      "args": { "msg": "hello tool" }
    }
    \`\`\`

    ## Tool

    \`\`\`tool-result
    {
      "toolCallId": "1234",
      "toolName": "myTool",
      "result": { "response": "hello agent" }
    }
    \`\`\`

This is parsed into structured messages for the AI.
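The parsing step can be sketched roughly as follows. This is a simplified illustration, not the library's parser: the `Message` and `Part` types and `parseChat` are hypothetical, and it assumes plain (unescaped) fences containing uncompressed JSON, as you would get with `--no-compression`.

```typescript
type Role = "user" | "assistant" | "tool";
type FenceKind = "tool-call" | "tool-result";

type Part =
  | { type: "text"; text: string }
  | { type: FenceKind; data: unknown };

interface Message {
  role: Role;
  parts: Part[];
}

// Built at runtime so this example can live inside a fenced block itself.
const FENCE = "`".repeat(3);

const ROLES: Record<string, Role> = {
  "## User": "user",
  "## Assistant": "assistant",
  "## Tool": "tool",
};

function parseChat(markdown: string): Message[] {
  const messages: Message[] = [];
  let current: Message | undefined;
  let textLines: string[] = [];
  let fence: { kind: FenceKind; lines: string[] } | undefined = undefined;

  // Turn buffered prose into a text part of the current message.
  // Text before the first role heading is dropped in this sketch.
  const flushText = () => {
    const text = textLines.join("\n").trim();
    if (current && text) current.parts.push({ type: "text", text });
    textLines = [];
  };

  for (const line of markdown.split("\n")) {
    const trimmed = line.trim();
    if (fence) {
      if (trimmed === FENCE) {
        // Closing fence: parse the collected lines as JSON.
        const data: unknown = JSON.parse(fence.lines.join("\n"));
        current?.parts.push({ type: fence.kind, data });
        fence = undefined;
      } else {
        fence.lines.push(line);
      }
      continue;
    }
    const role = ROLES[trimmed];
    if (role) {
      // A role heading starts a new message.
      flushText();
      current = { role, parts: [] };
      messages.push(current);
      continue;
    }
    if (trimmed === FENCE + "tool-call" || trimmed === FENCE + "tool-result") {
      flushText();
      fence = { kind: trimmed.slice(FENCE.length) as FenceKind, lines: [] };
      continue;
    }
    textLines.push(line);
  }
  flushText();
  return messages;
}

const sample = [
  "## User",
  "",
  "call the tool for me",
  "",
  "## Assistant",
  "",
  "will do!",
  "",
  FENCE + "tool-call",
  '{ "toolCallId": "1234", "toolName": "myTool", "args": { "msg": "hello tool" } }',
  FENCE,
].join("\n");

const parsed = parseChat(sample);
console.log(JSON.stringify(parsed, null, 2));
```

Because headings delimit messages, anything you add or delete under a heading in your editor simply becomes part of that message on the next parse.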

License

MIT